Memberships

AI AUTOMATION INSIDERS

1.5k members • Free

Lead Generation Insiders 🧲

1.5k members • $1,497

Lead Generation Secrets

19.7k members • Free

Million a Month Method (Free)

1.2k members • Free

Deal Maker Fast Track

3.4k members • Free

Blue-Collar Biz (Free)

5.2k members • Free

Vacarya Arbitrage Alliance

3.3k members • Free

InsightAI Academy

12.2k members • Free

AI Automation (A-Z)

99.7k members • Free

32 contributions to Assistable.ai

update_user_details not working when forwarding GHL Number to Assistable Number

Ticket 2201. I submitted a bug on help.assistable.ai and went through a long, multi-day chat that ended with the live agent telling me they would escalate it to you, @Jorden Williams. That was 6 days ago, and I have followed up many times with no answer. I finally got a response yesterday saying they were re-escalating, but there is still no timeline and no update today. That's the only reason I am bringing this here.

The problem: update_user_details is not working when forwarding a GHL number to an Assistable number. The assistant collects all the info on the call but does not save it to GHL. As a result, the assistant cannot book appointments and tells the caller it will follow up later. Jorden, can you please look into this?

0 likes • 1d

@Brandon Duncan The calendar booking problem is directly related to the information collection, though. The assistant cannot book into the calendar because the tool is not working properly and is not filling in the data. This only happens when the call is forwarded from a GHL number to the Assistable number attached to the assistant. If I call the Assistable number directly, it works without a hitch.

update_user_details not working

I clearly state in my prompt when the bot should use the update_user_details tool, but it's not working on one specific bot. Could I get someone to take a look at it?

Appointment Booking Cadence - FIXED

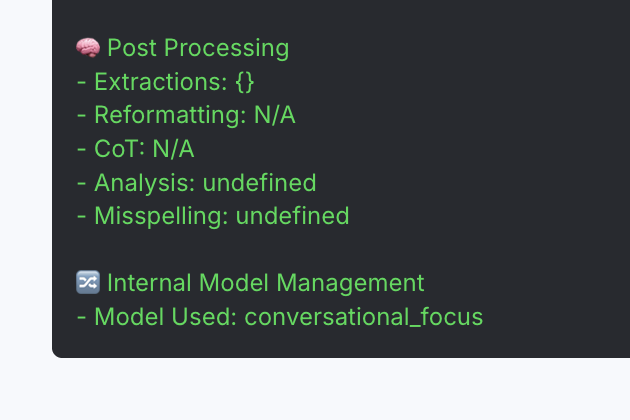

TL;DR: We implemented a conversation analysis model that dynamically switches parameters to be more reliable in different conversational stages (code-switching, if you will), along with reinforcement in the tool call return to guide the AI through booking (multi-agent frameworks built into the platform tools).

Basic Post Mortem:
- Sometimes LLMs update and their cadence changes a bit with context. To mitigate this, we engineered a dynamic model that changes parameters and even prompt injection based on the state of the conversation.
- In a nutshell, we run a basic analysis of your conversation, decide where in the conversation it is, and change the model, prompt injection instructions and reinforcement feedback accordingly (conversational, appointment booking, FAQ, etc.)

Let me know if that works betta for you. I'm back in office and glad to see everyone again :)
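For anyone trying to picture the stage-based switching described above, here is a minimal sketch. It is not Assistable's implementation: the keyword matcher stands in for the real conversation analysis model, and every name and value in it (`StageConfig`, `STAGE_CONFIGS`, `STAGE_KEYWORDS`, `detect_stage`, `config_for`, the temperatures and instructions) is a hypothetical chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StageConfig:
    """Settings applied while the conversation is in a given stage."""
    temperature: float
    injected_instruction: str


# Hypothetical per-stage settings; the values are illustrative only.
STAGE_CONFIGS = {
    "appointment_booking": StageConfig(
        0.1, "Confirm the date, time, and timezone before calling the booking tool."
    ),
    "faq": StageConfig(0.3, "Answer only from the knowledge base."),
    "conversational": StageConfig(0.7, "Keep replies short and friendly."),
}

# Hypothetical keyword lists standing in for a real analysis model.
STAGE_KEYWORDS = {
    "appointment_booking": ("book", "appointment", "schedule", "available"),
    "faq": ("how much", "price", "hours", "do you offer"),
}


def detect_stage(messages: list[str]) -> str:
    """Guess the stage from the last few messages; default to conversational."""
    recent = " ".join(messages[-3:]).lower()
    for stage, keywords in STAGE_KEYWORDS.items():
        if any(keyword in recent for keyword in keywords):
            return stage
    return "conversational"


def config_for(messages: list[str]) -> StageConfig:
    """Return the settings to use for the next model call."""
    return STAGE_CONFIGS[detect_stage(messages)]
```

Calling `config_for(["Hi", "Can I book an appointment for Friday?"])` would pick the low-temperature booking settings, which is the general idea behind tightening behavior only where reliability matters most.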

Voice Knowledge + RAG Update, General Chat improvements & Automatic Custom Field Extractions

Voice KB beta is live. Enable your voice knowledge bases via the knowledge base tab.

General RAG improvements:
- KB is now fed as training data actively throughout the conversation to give more deterministic answers in both chat and voice.
- KB is now also fed as a knowledge base context window, ensuring your assistants are always in the know.

General Chat improvements:
- Fine-tuning on appointment booking, verbiage and more is now embedded in our models to achieve the highest quality output and context.
- Injection update: assistants are fed higher quality data to understand the current date/time in your location's timezone.

Automatic Custom Field Extractions:
- Automatically have your custom fields filled out on a per-run basis on voice and chat. No need for custom tools, extraction tools or post-call webhooks. We do all of the work for you and without fail.
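As a rough illustration of the date/time injection idea, the sketch below builds a one-line context string in a location's timezone using Python's standard `zoneinfo` module. How Assistable actually formats or injects this is not stated in the post; the function name `build_time_context` and the output format are assumptions.

```python
from datetime import datetime, timezone
from typing import Optional
from zoneinfo import ZoneInfo


def build_time_context(tz_name: str, now_utc: Optional[datetime] = None) -> str:
    """Format the current moment in the location's timezone for prompt injection.

    tz_name is an IANA timezone name such as "America/Chicago".
    now_utc can be passed in for testing; it defaults to the real current time.
    """
    if now_utc is None:
        now_utc = datetime.now(timezone.utc)
    local = now_utc.astimezone(ZoneInfo(tz_name))
    # Hypothetical format; weekday included so the model can resolve "next Friday".
    return f"Current local date/time: {local:%A, %Y-%m-%d %H:%M} ({tz_name})"
```

Prepending a line like this to each model call gives the assistant a fixed reference point when a caller says something like "tomorrow at 3".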

Phone number locations

A few Q’s about the phone numbers.
1. When buying an LC number in GHL, it gives you the city associated with that number. Is it possible to add that feature to Assistable? I just bought a number for a client and it ended up being in a different city than the one they are located in, though in the same area code.
2. It would be nice to know if a number is in good standing or spam likely. When @Jorden Williams demoed the new interface, I remember this being available. Can this be added?
3. What do all of the new icons mean in the phone selection window? I can infer some of them, but an explanation when you hover over each icon would be nice.

0 likes • 27d

@Hari Prathap Balamurugan thanks for the answers. The problem with #2 is that you can’t see the standing of the phone number before buying it. That paired with not knowing what city the phone is located in means that you might have to buy a slew of numbers to get a number in the city that the business is located in that is also in good standing. Looking forward to the dev team improving this.
