Activity (contribution heatmap: Mon to Sun by month, Mar through Feb, shaded from Less to More)

Memberships

145 contributions to Assistable.ai

Updates & Releases / Fixes / Patches

Have any questions? Text me (iMessage & SMS): 770-296-7271

Need white-glove help right now? (I am in there too): help.assistable.ai

Have any more questions? Text me and I will make a video: 770-296-7271

-----

Where have I been?
- Actively trying to fire myself. I think a long-term worry internally, for me, and for the community is key man risk. My only objective is making sure this outlives me.

I'm mad.
- Me too. Let's figure it out together. Reach me or the team and let's make sure clients are super duper happy.

Why is X happening?
- I explained it in the vid along with the solution. If you are super busy, text me and I'll get you an answer in less than 5 mins.

What can I expect?
- A platform that does not rely on me or any vendors: something that we own in its entirety and that can be maintained by people much smarter than me.

X?
- Y A (aka text me or run it up to support and they will send it to me)

We are on call 24/7. Ask anyone who's talked to me in the past week: I have slept a total of 6 hrs (not even in my bed :/ ), yelled at some people upstream, and given every ounce of my time to help anyone downstream.

I miss making videos and being involved here. I want to do it more. That's what we are trying to do here.

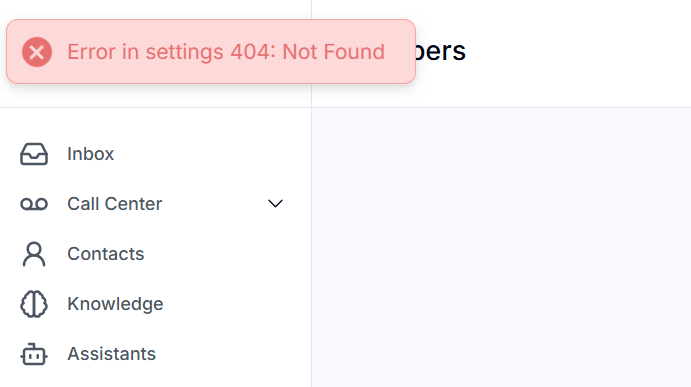

Error in settings 404:

Getting a weird error when trying to add an assistant to a brand new phone number. I bought another number just to make sure it wasn't the number and got the same error. Anyone else experience this?

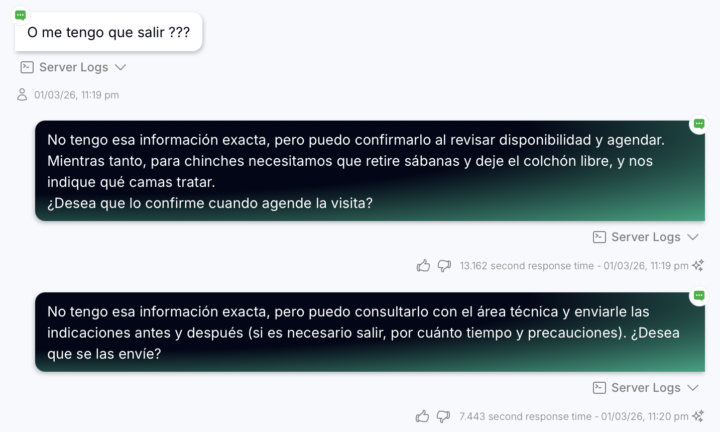

Double same message

Hi! I'm still learning Assistable and I'm getting great results, but sometimes it replies with the same message two or even three times in a row. I put the condition in the prompt (not to repeat messages). What can I do in this case?

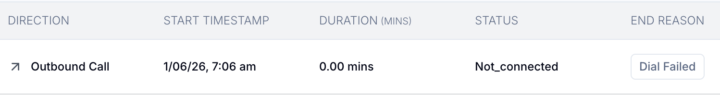

Dial failed

Hey all, hope the new year is treating everyone well 😀 Got a technical question: I've set up my outbound system and everything seems good to go, but for some reason I can't get the calls to actually connect/dial. I've tried multiple numbers and have consumed as much of Assistable's content as humanly possible, and I still can't find the issue. I've gone through all the motions: simplifying the GHL workflow to literally one node, going through all of the Twilio settings, etc. Nothing seems to work. If anyone has any insight into this, it would be very much appreciated. Cheers 🙏

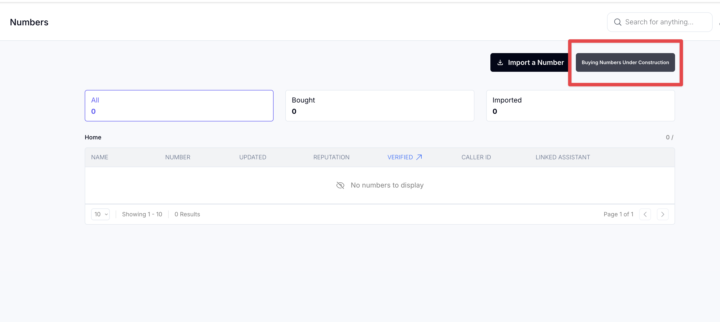

Not able to buy New numbers

Have not been able to buy new numbers. When will this open up again? Any ETA on this?

@prathap-balamurugan-9582

Assistable Skool Group Moderator & Support Team Member | AI Agent Builder | Expert in Assistable AI & GoHighLevel | Helping members through support.

Active 1d ago

Joined Aug 14, 2025
