🌍 Alignment Without Hand-Waving: Ethics as a Daily Practice

AI alignment often gets discussed at the level of civilization, existential risk, and saving humanity. That concern is understandable, and it matters. But if we only talk about alignment as a distant research problem, we miss the alignment work we can do right now, inside our teams, products, and daily decisions. In our world, alignment is not a theory. It is a practice. Ethics is not a poster on a wall. It is a set of repeatable behaviors that shape what AI does, what we allow it to touch, and how we respond when it gets things wrong.

------------- Context: Why This Conversation Keeps Getting Stuck -------------

When someone asks for tips on alignment and ethics, two unhelpful things often happen. Some people dismiss the concern as hype or doom, because it feels abstract. Others lean into fear, because it feels big and uncontrollable. Both reactions make it harder to do the real work.

The reality is that there are two layers of alignment. One is frontier alignment, the long-horizon research that tries to ensure increasingly powerful models remain safe and controllable in the broadest sense. Most of us are not directly shaping that layer day to day, although it is important and worthy of serious work. The other layer is operational alignment, which is how we align AI systems with our intent, our values, our policies, and our responsibility in real workplaces. This layer is not abstract at all. It is the difference between a team that adopts AI with confidence and a team that adopts AI with accidental harm.

We do not have to choose between caring about humanity-level questions and being practical. We can hold both. In fact, operational alignment is one of the most optimistic things we can do, because it builds the organizational muscle of responsibility. It turns concern into competence.
------------- Insight 1: Alignment Starts With Intent, Not Capability -------------

A lot of ethical trouble begins with a simple mistake: we adopt AI because it can do something, not because we have clearly decided what it should do.

The Difference Between Grinding… and Living on Purpose

I was up at 4:35am this morning… on a Sunday… diving head first into work. And the truth is, it didn’t feel like grinding at all.

Because when you love what you’re building, when you know it’s stretching you as a man, when it’s tied to being in service to your family and making a real impact… the work hits different. It stops feeling like pressure. It starts feeling like purpose.

I don’t get excited about being busy. I get excited about growing. About becoming more disciplined. More focused. More capable than I was yesterday. That’s what fuels me. Not the hours. Not the grind. The progress.

So if you’re in a season where you’re putting in the reps, don’t just ask yourself how hard you’re working. Ask yourself who the work is helping you become. Because when the mission is bigger than you… even a 4:35am Sunday start feels like a privilege.

Claude Interactive Responses, ChatGPT Ads Explained & More AI News You Can Use

This week, I show you how to use Claude's new Interactive Responses, break down AI ads at the Superb Owl and how ads in ChatGPT work (for now), review our testing results comparing GPT-5.3-codex and Claude Opus 4.6, and way more. Enjoy!

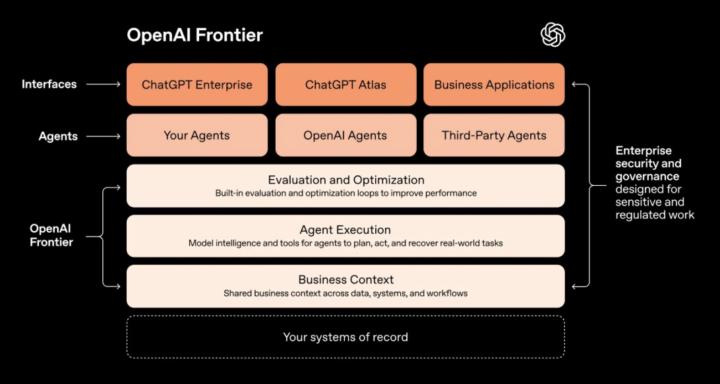

📰 AI News: OpenAI Launches “Frontier” To Deploy AI Agents Across Your Business

📝 TL;DR
OpenAI just introduced Frontier, a new enterprise platform designed to build, deploy, and manage AI agents that can do real work across company systems. This is OpenAI moving from “chat with AI” to “run workflows with AI,” with governance and permissions baked in.

🧠 Overview
Frontier is aimed at companies that keep getting stuck in AI pilots, where one team has a cool bot, but nothing scales across the org. OpenAI’s pitch is that agents need the same things employees need: shared context, onboarding, feedback loops, and clear boundaries. If you have ever thought “AI is useful, but it is messy to roll out safely,” Frontier is meant to be the missing layer between models and real business operations.

📜 The Announcement
OpenAI has launched Frontier as an enterprise platform for building and running AI agents inside real workflows. The focus is on two big buckets: AI teammates that support individual roles and teams, and automated business processes that can run end-to-end across systems of record. The platform is designed to integrate with existing software and data rather than forcing companies into a new stack. OpenAI also emphasizes that Frontier can work with third-party agents, not only OpenAI-built ones, and that it includes enterprise-grade identity, permissions, and observability.

⚙️ How It Works
• AI teammates - Role-based agents that help individuals and teams with work like analysis, forecasting, research, and software tasks, grounded in your business context.
• Business process automation - Agents can run end-to-end workflows across tools, reducing cycle time for functions like revenue ops, support, and procurement.
• Shared context layer - Agents can reference approved company knowledge so outputs are tied to real policies, data, and playbooks, not generic advice.
• Onboarding and feedback loops - You can teach agents how work is done in your org and improve quality over time based on review and outcomes.

📰 AI News: UK Moves To Put AI Chatbots Under Child Online Safety Laws

📝 TL;DR
The UK is moving to close a major loophole in online safety law by bringing AI chatbots under the Online Safety Act. The message from Downing Street is simple: tech is moving fast, and child safety rules need to move faster.

🧠 Overview
UK Prime Minister Sir Keir Starmer has pledged faster action to tighten laws designed to protect children online. The headline change is that AI chatbots, which have grown rapidly but sit in a gray area, would be pulled into the Online Safety Act’s enforcement framework. Alongside that, the government is also backing new proposals around preserving a child’s phone data after a death, and it is facing political pressure to go further, including calls for Parliament to vote on an under-16 social media ban.

📜 The Announcement
The government says it will close loopholes in existing online safety rules so that AI chatbot providers face illegal content duties similar to those of other online platforms. Starmer said Britain should aim to lead on online safety, arguing the law must keep pace with fast-moving technology. New proposals also include a measure that would require tech companies to preserve all the data on a child’s phone if the child dies, so families and investigators are not left fighting for access. Critics argue the government has moved too slowly so far and want Parliament to be given a vote on a child social media ban, rather than leaving it as a consultation and policy process.

⚙️ How It Works
• Closing the chatbot loophole - AI chatbots would be explicitly covered by the Online Safety Act’s duties around illegal content.
• Faster response mechanism - The government wants the ability to update rules more quickly as new risks emerge, rather than waiting years for fresh legislation.
• Preserving a child’s device data - Platforms and services would have to keep relevant data from a child’s phone if the child dies, supporting investigations and giving families clearer access.

skool.com/the-ai-advantage

Founded by Tony Robbins, Dean Graziosi & Igor Pogany - AI Advantage is your go-to hub to simplify AI and confidently unlock real & repeatable results
