
🧭 Why Collaboration With AI Requires Clear Human Intent

One of the most common frustrations with AI is the feeling that it does not quite understand what we want. The responses are close, but not right. Useful, but unfocused. Impressive, but misaligned. What we often label as an AI limitation is, more accurately, a signal about our own clarity. AI collaboration does not break down because the technology lacks intelligence. It breaks down because intent is missing. Without clear human intent, even the most capable systems struggle to deliver meaningful value.

------------- Context: When AI Feels Unreliable -------------

Many people approach AI by jumping straight into interaction. They open a tool, type a prompt, and wait to see what comes back. If the output misses the mark, the conclusion is often that the AI is unreliable, inconsistent, or not ready for real work.

What is less often examined is the quality of the starting point. Vague goals, unspoken constraints, and half-formed questions are common. We know we want help, but we have not articulated what success actually looks like.

In traditional tools, this ambiguity is sometimes tolerated. Software either works or it does not. AI behaves differently. It fills in gaps, makes assumptions, and extrapolates based on patterns. When intent is unclear, those assumptions can drift far from what we actually need.

This creates a cycle of frustration. We ask loosely, receive loosely, and then blame the system for not reading our minds. The opportunity for collaboration gets lost before it really begins.

------------- Insight 1: AI Amplifies What We Bring -------------

AI does not generate value in isolation. It amplifies inputs. When we bring clarity, it amplifies clarity. When we bring confusion, it amplifies confusion.

This is why two people can use the same tool and have radically different experiences. One sees insight and leverage. The other sees noise and inconsistency. The difference is rarely technical skill. It is intent.

Intent acts as a filter. It tells the system what matters and what does not. Without it, AI produces breadth instead of relevance. With it, the same system can surface nuance, trade-offs, and direction.


Why So Many People Feel Stuck Right Now (And How to Fix It)

So many people feel stuck right now not because they’re lazy, weak, or broken, but because they’ve lost a compelling future. When you take away someone’s belief that tomorrow can be better, that their effort leads somewhere meaningful, you don’t just kill motivation. You kill hope.

Napoleon Hill called this drifting. Living without a quest. No clear direction. No emotional pull. No reason to endure the hard days.

Humans are wired to move toward something. A future worth sacrificing for. A vision that pulls you forward when life gets heavy. Without that, everything feels harder than it needs to be. Work feels pointless. Discomfort feels unbearable. Life starts to feel like something you’re just trying to survive.

So here’s how to create a compelling future in a real, practical way.

First, stop being vague. “More money” or “less stress” won’t pull you forward. Get specific. How do you wake up when life is working? Who are you with? What problems are gone? If you can’t feel it, it won’t move you.

Second, decide who you need to become to live that future. More disciplined. More decisive. More honest. Less available to distractions. A compelling future isn’t just a destination. It’s an identity you’re growing into.

Third, give yourself a 90-day quest. Drifting happens when time feels endless. Momentum shows up when time feels intentional. One focus. One target. One thing that proves you’re moving again.

And finally, protect your optimism. This matters more than people think. If you live in cynicism, doom, and constant negativity, your future shrinks. Optimism isn’t naive. It’s a strategy.

A compelling future doesn’t magically appear. You choose it. You design it. And you defend it.

Question for you: what’s one thing about your future you’re choosing to be optimistic about again?

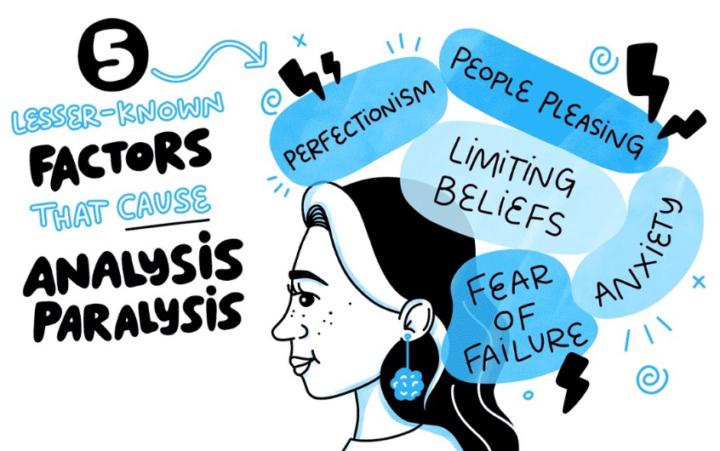

🧠 The Hidden Cost of Overthinking AI Instead of Using It

One of the most overlooked barriers to AI adoption is not fear, skepticism, or lack of access. It is overthinking: the habit of analyzing, preparing, and evaluating AI endlessly while rarely engaging with it in practice. It feels responsible, even intelligent, but over time it quietly stalls learning and erodes confidence.

------------- Context: When Preparation Replaces Progress -------------

In many teams and organizations, AI is talked about constantly. Articles are shared, tools are compared, use cases are debated, and risks are examined from every angle. On the surface, this looks like thoughtful adoption. Underneath, it often masks a deeper hesitation to begin.

Overthinking AI is socially acceptable. It sounds prudent to say we are still researching, still learning, still waiting for clarity. There is safety in staying theoretical. As long as AI remains an idea rather than a practice, we are not exposed to mistakes, limitations, or uncertainty.

At an individual level, this shows up as consuming content without experimentation. Watching demos instead of trying workflows. Refining prompts in our heads instead of testing them in context. We convince ourselves we are getting ready, when in reality we are standing still.

The cost of this pattern is subtle. Nothing breaks. No failure occurs. But learning never fully starts. And without practice, confidence has nowhere to grow.

------------- Insight 1: Thinking Feels Safer Than Acting -------------

Thinking gives us the illusion of control. When we analyze AI from a distance, we remain in familiar territory. We can evaluate risks, compare options, and imagine outcomes without putting ourselves on the line.

Using AI, by contrast, introduces exposure. The output might be wrong. The interaction might feel awkward. We might not know how to respond. These moments challenge our sense of competence, especially in environments where expertise is valued.

Overthinking becomes a way to protect identity. As long as we are still “learning about AI,” we cannot be judged on how well we use it. The problem is that this protection comes at a price. We trade short-term comfort for long-term capability.

🔖 Can Gemini and Google Slides match this level? ⚡

I read this workflow about ChatGPT generating presentations. It raised a question. Can we replicate this exactly with Gemini and Google Slides today? I need to know if Gemini can already analyze, structure, and generate the final file (or connect to design) without the manual friction. I’m looking for real efficiency. Not theory. If anyone has a proven workflow, I’d appreciate the insight.

Your Favourite AI Productivity Hacks

Hello 👋 I would love to hear which AI tools you’re using to streamline business processes and daily tasks (like inbox management). Our team is getting busier with exciting plans to execute this year 🙌, and we would benefit from leveraging AI to save us time (and energy). What would you recommend?


skool.com/the-ai-advantage

Founded by Tony Robbins, Dean Graziosi & Igor Pogany. AI Advantage is your go-to hub to simplify AI and confidently unlock real, repeatable results.
