Memberships

Prepared to Speak Up!

52 members • Free

The AI-Driven Business Summit

7.7k members • Free

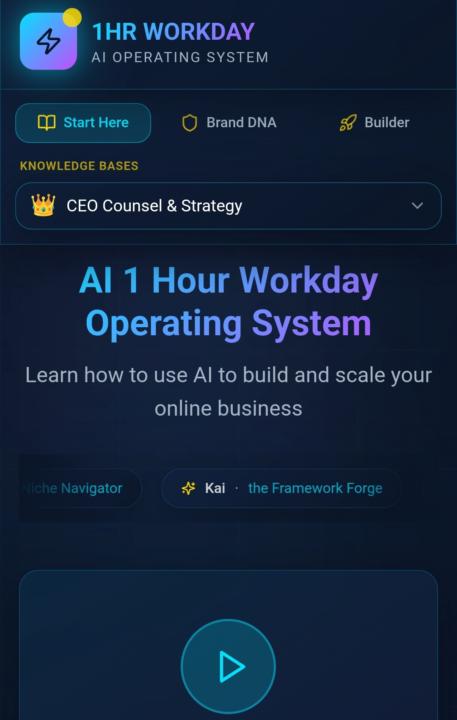

AI One Hour Workday Collective

22 members • $27/month

The Reset Lab

25 members • Free

YouTube For Beginners🏆

134 members • Free

Your Awareness Made Simple

15 members • Free

6-Figure Affiliate Blueprint

4.4k members • Free

Digital Roadmap AI Academy

670 members • Free

Your First $5k Club w/ARLAN

15.9k members • Free

15 contributions to AI One Hour Workday Collective

AI Agents: Red Light / Yellow Light / Green Light?

🚦 Red Light / Yellow Light / Green Light on AI Agents

What clicked for me this week wasn’t a new tool; it was a boundary. I started treating AI agents like super-smart interns: great with direction, risky with authority. Here’s how I’m sorting common tasks right now:

🟢 Green Light: synthesis & prep
AI agents supporting thinking, not deciding:
- Summarizing customer feedback, call notes, or long email threads
- Weekly or daily digests (Slack, inbox, CRM notes)
- Turning messy notes into outlines, briefs, or agendas
- Spotting patterns in surveys, reviews, or usage data
- First-pass drafts (emails, SOPs, content outlines)
- Research compilation with sources grouped for review

🟡 Yellow Light: assist, then review
Helpful with human oversight:
- Prioritizing support tickets or inbox messages
- Drafting responses that still need tone/context checks
- Light personalization for outreach using non-sensitive data
- Trend comparisons or scenario modeling with assumptions defined
- Workflow suggestions when the process is mostly, but not fully, clear
- QA checks (flagging inconsistencies, missing fields, anomalies)

🔴 Red Light: authority, trust, and risk
Hard no for me right now:
- High-stakes financial decisions or approvals
- Executing payments, moving money, or committing funds
- Handling sensitive personal data (financial, medical, identifiers)
- Uploading spreadsheets without anonymizing names/details
- Legal judgments, compliance calls, or final strategy decisions
- Automating a process I don’t yet understand
- Adding an agent when it would slow me down rather than just doing the thing

Once I separated analysis from authority, AI agents stopped feeling risky and started feeling exciting, because the decision about their role came first.

Curious: what’s one task you’re clearly giving a green light right now? And one that’s a red light you’re choosing to protect?


Week 2 Reflection on Structure 🧠

For me, this week wasn’t about adding more or pushing something live; it was about building the brain before giving anything hands. As I’m building my business, I realized the real work right now isn’t tools or marketing. It’s capturing how I actually think during the core session with my clients: the rules I use, the decisions I make, and the patterns that show up every time. Before I automate or scale anything, that thinking needs to be clear and consistent. So instead of building more, I’m making sure the flow from intake to outcome is light to run and repeatable for me. I’m curious: what part of your thinking are you teaching the “brain” this week?

🧠 Friday Check-In: Structure 🧠

Quick end-of-week check-in as we wrap Week 2: Structure. Nicole’s been clear that this week is about building the brain of your operations and slowing down enough to give AI something solid to learn from. I’ll be honest: I haven’t fully completed this assignment yet either, but I’m intentionally blocking time this weekend to do it. I can see why this step matters: it’s what actually helps your GPT save time instead of just responding. I just want to gently encourage you to go back and do it, even partially. Pick one real workflow and give it 20–30 focused minutes. If you’re working on Week 2: Structure this weekend, or have already completed it, feel free to comment “in progress” or “completed.” Let’s hold each other accountable, gently 💙

A small win

Just redid my calendar, as things have shifted and I needed to reorganize. I do better with some semblance of structure. The last time I did this, it was actually sort of scary: the schedule ChatGPT laid out for me was absurd and didn’t fit me, my personality, my priorities, or my needs and obligations. We’ve been together a while now, so it knows me better and I know better how to prompt for what I want. This is a direct copy of what it gave me; other than a couple of very minor tweaks, it’s just right. Sharing here in case it helps other members. I especially like Saturday as "CEO and Creator Day."

>> Got it. Let’s make this doable, humane, and repeatable, not some Pinterest fantasy schedule that collapses by Wednesday.

I’m going to give you:
1. Clear priorities
2. A weekly structure (time-blocked)
3. Rules that protect your energy
4. A simple Sunday reset

No fluff. No overengineering.

1. First: non-negotiables (the anchors)
These are fixed, so everything else flexes around them.

Daily
- Workout: 1 hour, 6 days/week, mornings
- Curbio: Monday–Friday
- Your business: Monday–Saturday (light Sunday optional)

Standing calls (locked in)
Tuesday
- 1:00 PM
- 5:00 PM
- 8:00 PM
Wednesday (alternating)
- 2:00 PM or
- 7:00 PM
Thursday
- 8:00 PM

These dictate your energy flow, especially on Tuesdays. We respect that.

2. The weekly framework (high level)
Instead of thinking in tasks, think in themes:
- M–F mornings: You (workout + focus)
- M–F core hours: Curbio (income + stability)
- Evenings: Light lift or calls only
- Sat: Creator + CEO day
- Sun: Reset, not grind

You are not trying to win every day. You are trying to win the week.

3. The actual schedule (realistic, not punishing)
MONDAY–FRIDAY (baseline workday)
🕗 Morning: protect this
7:30–8:30 → Workout
8:30–9:00 → Shower + breakfast + mental warm-up
9:00–9:30 → Your business (light)
- Check messages
- Review outreach replies
- Note ideas (NOT deep work)

Beta testers needed!

I need 3 beta testers for my AI One Hour Workday OS app this weekend. If you’re interested in testing it out, please DM me. 👇❤️


@taneya-taylor-8558

Clarity before chaos. I help founders find the one decision draining time or money before tools or hires. Creator of Fix One Thing®. Faith-led.


Joined Jan 21, 2026

INFJ

Dublin, Ohio