Memberships

The AI Advantage

73.6k members • Free

AgentVoice

575 members • Free

Early AI-Starters

176 members • Free

Voice AI Accelerator

7.3k members • Free

OS Architect

11k members • Free

Vertical AI Builders

9.9k members • Free

Brendan's AI Community

23.5k members • Free

AI Automation Agency Hub

292.9k members • Free

2 contributions to The AI Advantage

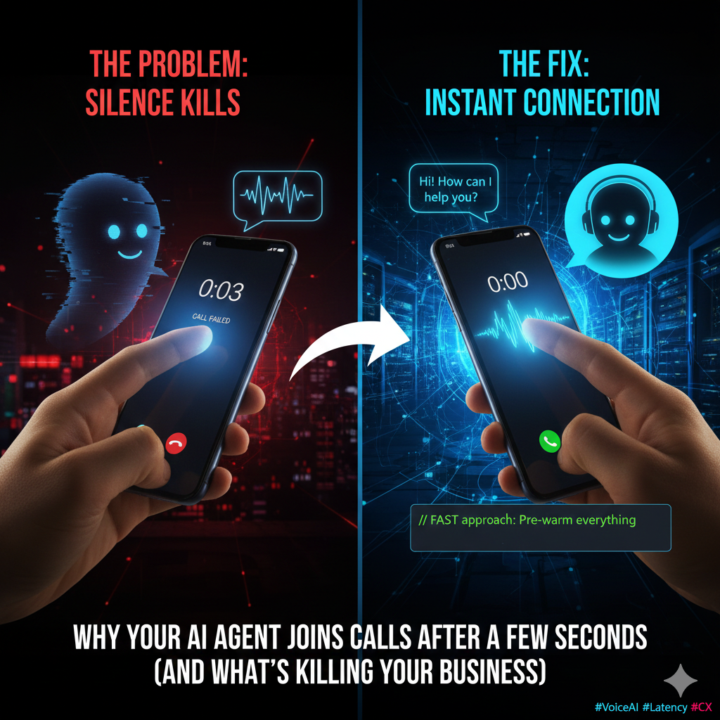

Why 40% of my AI agent calls were getting hung up on

Last week I figured out why our AI receptionist had a 58% connection rate even though it was working perfectly. People were hanging up before it even said hello.

Here's what was happening:

Call comes in → Initialize AI session → Load customer data → Connect voice → Generate greeting → Finally speak

Total time? 2.8 seconds of dead silence.

Turns out people hang up after 2-3 seconds if nobody answers. And if they say "Hello?" twice into the void, they're gone.

I was so focused on making the AI smart that I forgot about the first 3 seconds.

The fix was stupid simple. Instead of doing everything AFTER the call connects, I started doing it BEFORE:

- Keep AI sessions pre-warmed in a pool (ready to go)
- Pre-generate common greetings as audio files
- Play greeting immediately, load customer context in background

__________________________________________________________________

// Old way: 2800ms
await initAI() → await loadContext() → await speak()

// New way: 150ms
playPrerecordedGreeting() + loadContextInBackground()

__________________________________________________________________

Answer time went from 2.8 seconds to 0.2 seconds. Connection rate jumped from 58% to 94%.

Same AI. Same quality. Just answered faster.

One client was losing $47k/month from people hanging up on silence. Fixed it with this and saved them $38k monthly.

Lesson: Your AI can be perfect but if it doesn't pick up fast, nobody will ever know.

If you're building voice AI and seeing high abandonment rates, check your answer time first. Most people are optimizing the wrong thing.
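For anyone who wants to see the shape of it, here is a rough TypeScript sketch of the pre-warmed pool plus the "greet first, load context in the background" ordering. It is not the production code from the post. `SessionPool`, `AISession`, `loadContext`, `playPrerecordedGreeting`, and `handleCall` are all hypothetical names, session creation is instant here instead of slow, and the 50 ms lookup delay is an arbitrary placeholder.

```typescript
// All names below are hypothetical stand-ins, not a real voice SDK.
interface AISession {
  id: number;
}

// Keeps `targetSize` sessions initialized before any call arrives.
class SessionPool {
  private ready: AISession[] = [];
  private nextId = 1;

  constructor(private readonly targetSize: number) {
    this.refill();
  }

  // Stand-in for the slow initAI() step. Instant here; in a real system
  // this would be async and the refill would run off the call path.
  private createSession(): AISession {
    return { id: this.nextId++ };
  }

  private refill(): void {
    while (this.ready.length < this.targetSize) {
      this.ready.push(this.createSession());
    }
  }

  // Hand out a warm session, then top the pool back up.
  acquire(): AISession {
    const session = this.ready.shift() ?? this.createSession();
    this.refill();
    return session;
  }

  get size(): number {
    return this.ready.length;
  }
}

// Simulated slow customer lookup (50 ms is an arbitrary placeholder).
async function loadContext(callerId: string): Promise<string> {
  await new Promise<void>((resolve) => setTimeout(resolve, 50));
  return `context for ${callerId}`;
}

// Stand-in for streaming a pre-generated audio file to the caller.
function playPrerecordedGreeting(log: string[]): void {
  log.push("greeting");
}

async function handleCall(
  pool: SessionPool,
  callerId: string,
  log: string[]
): Promise<string> {
  const session = pool.acquire();
  // Kick off the slow lookup but do not await it yet.
  const contextPromise = loadContext(callerId);
  // The caller hears audio while the lookup is still in flight.
  playPrerecordedGreeting(log);
  const context = await contextPromise;
  log.push("context");
  return `session ${session.id}: ${context}`;
}
```

The point of the ordering in `handleCall` is that nothing slow sits between the call connecting and the first audio. The only `await` comes after the greeting has started.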

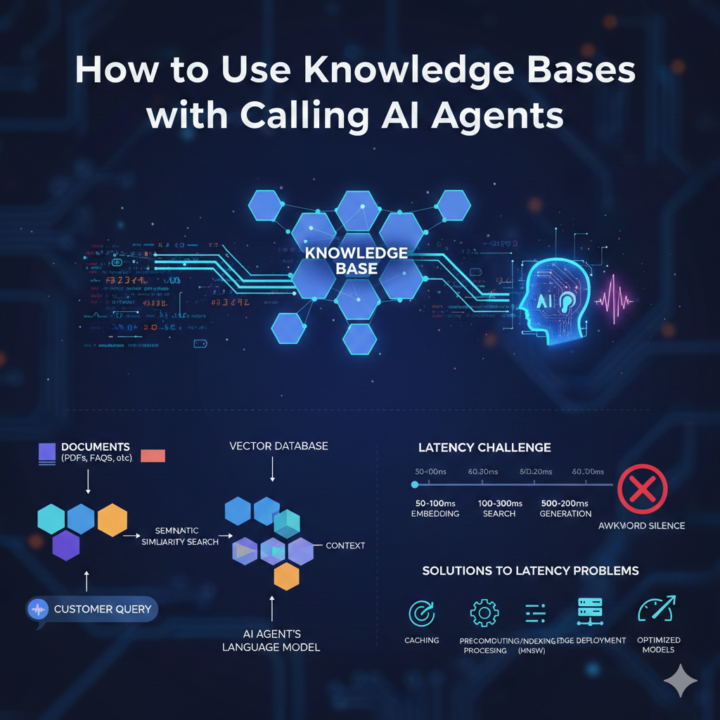

Hooking up knowledge bases to voice AI agents

So I've been building these calling AI agents that need to pull info from company docs during live calls. Turns out it's way trickier than I thought.

Here's what happens behind the scenes:

You take all your docs, FAQs, policies, whatever, and chop them into small chunks. Then you run them through an embedding model that turns text into numbers (vectors). These get stored in a vector database like Pinecone or Weaviate.

When someone calls and asks a question, the agent converts their question into the same vector format and searches for similar chunks. Grabs the top 3-5 matches, feeds them to the AI, and boom, you get an answer.

The problem? Speed. People expect instant responses on calls. But all this searching and retrieving takes time. I was seeing 2-3 second delays, which feels like forever on a phone call.

What actually worked for me:

1. Cache everything. Common questions get stored so you skip the whole search process.
2. Keep chunks small but overlap them a bit so you don't lose context.
3. Use faster algorithms for searching (HNSW is solid).
4. Don't wait for everything. Start generating the answer as soon as you get the first chunk.

I also created a "hot cache" of the top 50 most asked things. Keeps it in memory. Crazy fast.

The latency went from 2+ seconds down to under 600ms for most queries. Still not perfect but way more natural.

If you're building something similar, my advice: start simple. Get it working first, then optimize. And measure everything because you'll be surprised where the slowdowns actually are.
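A minimal TypeScript sketch of three of the pieces above: overlapping chunks, a top-k similarity search, and a hot cache checked before any search runs. Everything here is an assumption for illustration. `chunkWithOverlap`, `topK`, `HotCache`, and `retrieve` are hypothetical names, the vectors are supplied by the caller instead of a real embedding model, and the search is a brute-force cosine scan standing in for an HNSW index in something like Pinecone or Weaviate.

```typescript
// Split text into windows of `size` words that share `overlap` words.
function chunkWithOverlap(text: string, size: number, overlap: number): string[] {
  if (size <= 0 || overlap < 0 || overlap >= size) {
    throw new Error("need size > 0 and 0 <= overlap < size");
  }
  const words = text.split(/\s+/).filter((w) => w.length > 0);
  const chunks: string[] = [];
  for (let start = 0; start < words.length; start += size - overlap) {
    chunks.push(words.slice(start, start + size).join(" "));
    if (start + size >= words.length) break; // this window reached the end
  }
  return chunks;
}

interface IndexedChunk {
  text: string;
  vector: number[]; // assumed to come from an embedding model
}

function cosine(a: number[], b: number[]): number {
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  return normA === 0 || normB === 0 ? 0 : dot / Math.sqrt(normA * normB);
}

// Brute-force scan; a real deployment would query an ANN index instead.
function topK(query: number[], index: IndexedChunk[], k: number): IndexedChunk[] {
  return index
    .slice()
    .sort((x, y) => cosine(query, y.vector) - cosine(query, x.vector))
    .slice(0, k);
}

// In-memory answers for the most common questions, keyed by normalized text.
class HotCache {
  private answers = new Map<string, string>();

  static normalize(question: string): string {
    return question
      .toLowerCase()
      .replace(/[^a-z0-9\s]/g, "")
      .replace(/\s+/g, " ")
      .trim();
  }

  set(question: string, answer: string): void {
    this.answers.set(HotCache.normalize(question), answer);
  }

  get(question: string): string | undefined {
    return this.answers.get(HotCache.normalize(question));
  }
}

// Check the hot cache first; only fall back to vector search on a miss.
function retrieve(
  question: string,
  queryVector: number[],
  cache: HotCache,
  index: IndexedChunk[],
  k: number = 3
): { source: "cache" | "search"; texts: string[] } {
  const cached = cache.get(question);
  if (cached !== undefined) {
    return { source: "cache", texts: [cached] };
  }
  return { source: "search", texts: topK(queryVector, index, k).map((c) => c.text) };
}
```

Normalizing the question before the cache lookup means small differences in casing or punctuation still hit the same entry. Exact-match caching like this only helps with repeated phrasings, so anything else still goes through the search path.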

Active 17h ago

Joined Jan 28, 2026
