
Memberships

OpenClaw Builders

98 members • Free

3 contributions to OpenClaw Academy

WHICH MAC MINI?

Hey everyone! I was thinking of getting a Mac Mini. Should I get the 256GB or the 512GB SSD? I need a computer to run OpenClaw locally. I want it to have more tools, be more powerful, and let me see what it's doing, so I'm happy to make this investment. What are your suggestions? Thank you!

Newbie intro: Setting up OpenClaw on a fresh Mac Mini

Hi everyone! 👋 I’m retired and excited to spend some of my free time diving into the world of OpenClaw. I’ve got a spare gaming PC (after my son reluctantly abandoned it to go to college) and I'm looking to learn how to set everything up from scratch. Looking forward to learning from this community and getting my agent up and running! Thanks in advance for all the guidance.


@Dominic Damoah thank you for the sessions. Although I only catch bits and pieces here and there, I think there are so many technical details in them that YouTube videos don't talk about. You are the expert!! I may consider installing it on a Mac and learning some vibe coding along the way.

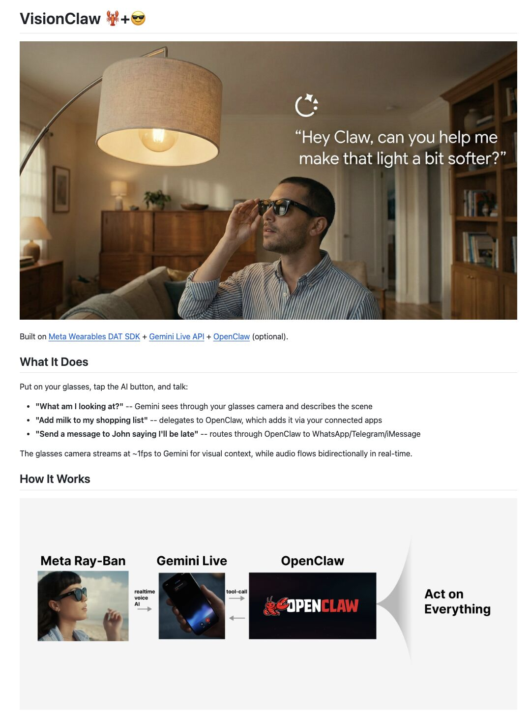

VisionClaw nuts!

Wait... someone just open-sourced a real-time OpenClaw agent 🤯

It sees what you see. Hears what you say. Acts on your behalf. All through your voice. From your Meta glasses or just your iPhone.

It's called VisionClaw. And it uses OpenClaw and Gemini Live. Put on your glasses or open the app on your phone, tap one button, and just talk.

• "What am I looking at?" and Gemini sees through your camera and describes the scene in real time.
• "Add milk to my shopping list" and it actually adds it through your connected apps.
• "Send John a message saying I'll be late" and it routes through WhatsApp, Telegram, or iMessage.
• "Find the best coffee shops nearby" and it searches the web and speaks the results back to you.

All happening live. While you're walking around.

Here's what's running under the hood:
• Camera streams video at ~1fps to Gemini Live API
• Audio flows bidirectionally in real time over WebSocket
• Gemini processes vision + voice natively (not speech-to-text first)
• OpenClaw gateway gives it access to 56+ skills and connected apps
• Tool calls execute and results are spoken back to you

Your camera captures video. Gemini Live processes what it sees and hears over WebSocket, decides if action is needed, routes tool calls through OpenClaw, and speaks the result back.

No Meta glasses? No problem. Your iPhone camera works just the same. Clone the repo, add your Gemini API key, build in Xcode, and you're running.

100% open source. Built with Gemini Live API + OpenClaw.
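To make that flow a little more concrete, here is a toy Python sketch of two pieces of it: thinning camera frames down to roughly 1fps before sending them, and routing a tool call from the model to a named skill. It does not use the real Gemini Live API, OpenClaw, or VisionClaw code; `FrameThrottle`, `ToolCall`, `SkillRouter`, and the `add_to_shopping_list` skill are hypothetical names made up for illustration only.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, Dict, Optional


class FrameThrottle:
    """Hypothetical helper: let through at most `fps` frames per second, drop the rest."""

    def __init__(self, fps: float = 1.0, clock: Callable[[], float] = time.monotonic):
        self.interval = 1.0 / fps   # minimum seconds between forwarded frames
        self.clock = clock          # injectable clock so the logic is testable
        self._last: Optional[float] = None

    def should_send(self) -> bool:
        now = self.clock()
        if self._last is None or now - self._last >= self.interval:
            self._last = now
            return True             # forward this frame to the model
        return False                # too soon since the last frame; skip it


@dataclass
class ToolCall:
    """Hypothetical shape of a tool call the model decides to make."""
    name: str
    args: dict = field(default_factory=dict)


class SkillRouter:
    """Hypothetical stand-in for a gateway that maps skill names to handlers."""

    def __init__(self) -> None:
        self._skills: Dict[str, Callable[..., str]] = {}

    def register(self, name: str, handler: Callable[..., str]) -> None:
        self._skills[name] = handler

    def route(self, call: ToolCall) -> str:
        handler = self._skills.get(call.name)
        if handler is None:
            return f"Sorry, I don't have a skill called {call.name!r}."
        return handler(**call.args)  # the returned text would be spoken back


# Example: a hypothetical skill mirroring "Add milk to my shopping list".
shopping_list = []


def add_to_shopping_list(item: str) -> str:
    shopping_list.append(item)
    return f"Added {item} to your shopping list."


router = SkillRouter()
router.register("add_to_shopping_list", add_to_shopping_list)
reply = router.route(ToolCall("add_to_shopping_list", {"item": "milk"}))
print(reply)
```

Injecting the clock keeps the throttle deterministic in tests; in a real app the camera callback would simply call `should_send()` on every frame and discard the ones that return `False`.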


@new-life-8602

Just retired and want to explore and improve my knowledge using AI. I have no programming experience except for Fortran back in the 1980s, lol.


Joined Feb 8, 2026

Penang
