Memberships

OpenClaw Academy

329 members • Free

Blueprint For Bitcoin (B4B)

44 members • $59/month

Remote Sales Academy

339 members • Free

Social Media Monetisation

2.4k members • Free

Bullrun Millions Crypto Course

1.4k members • $999/month

3 contributions to OpenClaw Academy

OpenClaw Trust Center

Amazing resource: guys, read up on OpenClaw security here: https://trust.openclaw.ai/trust

1 like • 19h

Thanks, that makes sense. At this stage I’m experimenting and learning the Clawbot process properly before committing to bigger hardware. My focus right now is understanding training, logging, and building one solid use case first. Once I’m confident it’s producing real value consistently, then I’ll look at upgrading the machine if needed. Appreciate you sharing your experience.

VisionClaw nuts!

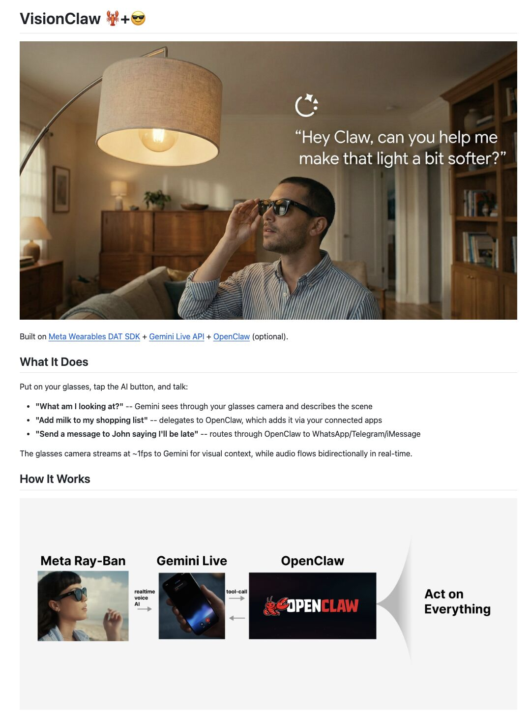

Wait... someone just open-sourced a real-time OpenClaw agent 🤯

It sees what you see. Hears what you say. Acts on your behalf. All through your voice. From your Meta glasses or just your iPhone.

It's called VisionClaw. And it uses OpenClaw and Gemini Live.

Put on your glasses or open the app on your phone, tap one button, and just talk.

"What am I looking at?" and Gemini sees through your camera and describes the scene in real time.

"Add milk to my shopping list" and it actually adds it through your connected apps.

"Send John a message saying I'll be late" and it routes through WhatsApp, Telegram, or iMessage.

"Find the best coffee shops nearby" and it searches the web and speaks the results back to you.

All happening live. While you're walking around.

Here's what's running under the hood:

• Camera streams video at ~1fps to Gemini Live API
• Audio flows bidirectionally in real time over WebSocket
• Gemini processes vision + voice natively (not speech-to-text first)
• OpenClaw gateway gives it access to 56+ skills and connected apps
• Tool calls execute and results are spoken back to you

Your camera captures video. Gemini Live processes what it sees and hears over WebSocket, decides if action is needed, routes tool calls through OpenClaw, and speaks the result back.

No Meta glasses? No problem. Your iPhone camera works just the same.

Clone the repo, add your Gemini API key, build in Xcode, and you're running.

100% open source. Built with Gemini Live API + OpenClaw.
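For anyone trying to picture the flow described in the post, here is a rough Python sketch of two of the steps: throttling camera frames down to about one per second, and routing a tool call to a skill. This is only an illustration under my own assumptions. `FrameThrottle`, `SKILLS`, `skill`, and `route_tool_call` are hypothetical names, not VisionClaw's, Gemini Live's, or OpenClaw's actual API, and nothing here touches the network.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class FrameThrottle:
    """Let through at most one frame per `interval` seconds (~1 fps by default)."""

    interval: float = 1.0
    _last_sent: float = field(default=float("-inf"), init=False)

    def should_send(self, timestamp: float) -> bool:
        # Forward the frame only if enough time has passed since the last one.
        if timestamp - self._last_sent >= self.interval:
            self._last_sent = timestamp
            return True
        return False


# Hypothetical in-memory skill registry standing in for a gateway's skills.
SKILLS: Dict[str, Callable[[dict], str]] = {}
SHOPPING_LIST: List[str] = []


def skill(name: str):
    """Decorator that registers a handler under a skill name."""

    def register(fn: Callable[[dict], str]) -> Callable[[dict], str]:
        SKILLS[name] = fn
        return fn

    return register


@skill("shopping_list.add")
def add_to_shopping_list(args: dict) -> str:
    SHOPPING_LIST.append(args["item"])
    return f"Added {args['item']} to your shopping list."


def route_tool_call(call: dict) -> str:
    """Look up the requested skill and return the text to speak back."""
    handler = SKILLS.get(call["name"])
    if handler is None:
        return f"Sorry, I can't do {call['name']} yet."
    return handler(call.get("args", {}))
```

In this sketch, a loop reading camera frames would call `should_send(timestamp)` on each frame and drop the ones that return `False`, and a model response such as `{"name": "shopping_list.add", "args": {"item": "milk"}}` would be passed to `route_tool_call`, with the returned string spoken back to the user.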

Which channel do you use most for Clawdbot/Moltbot?

Let me know which channel you guys use or plan to use.

Poll

35 members have voted

Active 9h ago

Joined Feb 13, 2026

United Kingdom
