Memberships

No-Code Nation

3.2k members • Free

AI Workshop Lite

14.8k members • Free

AI Cyber Value Creators

8.1k members • Free

Voice AI Academy

1k members • Free

Voice AI HQ

370 members • Free

Voice AI Accelerator

7.3k members • Free

Brendan's AI Community

23.5k members • Free

AI Sales Agency Launchpad

13.9k members • Free

Content Mastery

8.3k members • Free

4 contributions to Voice AI HQ

Chunking

Hello all, I have a question: what is the best method for chunking large, complex PDFs to feed them into a RAG pipeline? Note: I have already extracted the raw text in Markdown format using Docling.
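One common starting point for text that is already in Markdown is to chunk along headings, so each chunk stays inside one section and carries its heading trail as metadata. The sketch below is only an illustration of that idea, not a recommended best method: the function name `chunk_markdown`, the `max_chars` limit, and the output shape are all assumptions made for the example. It also ignores fenced code blocks (a `#` comment inside one would be mistaken for a heading), assumes heading levels are not skipped, and never splits a single paragraph that is longer than the limit.

```python
import re

# Matches ATX-style Markdown headings such as "## Setup".
HEADING = re.compile(r"^(#{1,6})\s+(.*)$")


def chunk_markdown(text: str, max_chars: int = 1500) -> list[dict]:
    """Split Markdown into chunks that never cross a heading boundary."""
    chunks = []
    path = []    # current heading trail, e.g. ["Guide", "Setup"]
    buffer = []  # lines collected for the current section

    def flush():
        body = "\n".join(buffer).strip()
        buffer.clear()
        if not body:
            return
        # Pack paragraphs into pieces of at most max_chars where possible.
        piece = ""
        for para in re.split(r"\n\s*\n", body):
            if piece and len(piece) + len(para) + 2 > max_chars:
                chunks.append({"headings": list(path), "text": piece})
                piece = para
            else:
                piece = f"{piece}\n\n{para}" if piece else para
        chunks.append({"headings": list(path), "text": piece})

    for line in text.splitlines():
        match = HEADING.match(line)
        if match:
            flush()
            level = len(match.group(1))
            # Drop headings at the same or a deeper level, then push this one.
            del path[level - 1:]
            path.append(match.group(2).strip())
        else:
            buffer.append(line)
    flush()
    return chunks
```

Storing the heading trail with each chunk makes it possible to prepend it to the chunk text before embedding, which tends to help retrieval when a section body does not repeat its own topic.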

My objective is to implement real life projects

I have experimented with AI voice agents on the GHL platform and, separately, with Retell AI. Even though the agents I built work, I have never felt confident enough to use them in real-life projects. Has anyone else experienced something similar? Example project: an AI voice agent that answers incoming calls, interacts with callers, and schedules appointments. My objective is to move from experimenting to implementing real-life projects.

Are there any ways to interrupt Vapi's silence?

I have been working with automation for quite some time and recently started building voice agents on Vapi and Retell. Overall, everything is going well, but I often run into situations where a Vapi conversation simply stops (sometimes the transcriber cannot identify what the user said, nothing is sent to the model, or the websocket breaks). For this reason, I am increasingly interested in building such agents with custom code, and I have two questions. Please let me know if you have any answers:

1. Can I use custom code to detect that there has been silence on the line for, say, 10 seconds, and force the LLM to generate an engaging message and play it back to bring the user back into the dialogue?
2. I am interested in DSPy and wonder whether it can be used for voice agents on Vapi.

So far, I haven't written a single assistant in code and am only getting ready to start working on it seriously, so there are many things I don't understand yet.

1 like • Sep '25

Found a solution. If the assistant speaks English, you can go to Messaging > Idle Messages. For other languages, set the idle messages with an API request:

    curl -X PATCH https://api.vapi.ai/assistant/id \
      -H "Authorization: Bearer token" \
      -H "Content-Type: application/json" \
      -d '{
        "messagePlan": {
          "idleMessages": [
            "insert-your-message-here-1",
            "insert-your-message-here-2"
          ],
          "idleMessageMaxSpokenCount": 3,
          "idleTimeoutSeconds": 8
        }
      }'
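For anyone who would rather send this from a script than from curl, here is a minimal Python sketch of the same PATCH request using only the standard library. The field names and the URL pattern are copied from the request above; the function names `build_idle_message_patch` and `patch_assistant` are made up for this example, and the assistant ID and token are placeholders you have to supply yourself.

```python
import json
import urllib.request


def build_idle_message_patch(messages, max_spoken_count=3, timeout_seconds=8):
    """Build the JSON body with the same fields as the curl request."""
    return {
        "messagePlan": {
            "idleMessages": list(messages),
            "idleMessageMaxSpokenCount": max_spoken_count,
            "idleTimeoutSeconds": timeout_seconds,
        }
    }


def patch_assistant(assistant_id, token, payload):
    """Send the PATCH request; assistant_id and token are placeholders."""
    request = urllib.request.Request(
        url=f"https://api.vapi.ai/assistant/{assistant_id}",
        data=json.dumps(payload).encode("utf-8"),
        method="PATCH",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


payload = build_idle_message_patch(
    ["insert-your-message-here-1", "insert-your-message-here-2"]
)
# patch_assistant("your-assistant-id", "your-token", payload)  # performs the real call
```

Keeping the payload builder separate from the network call makes it easy to inspect or log the body before anything is sent.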

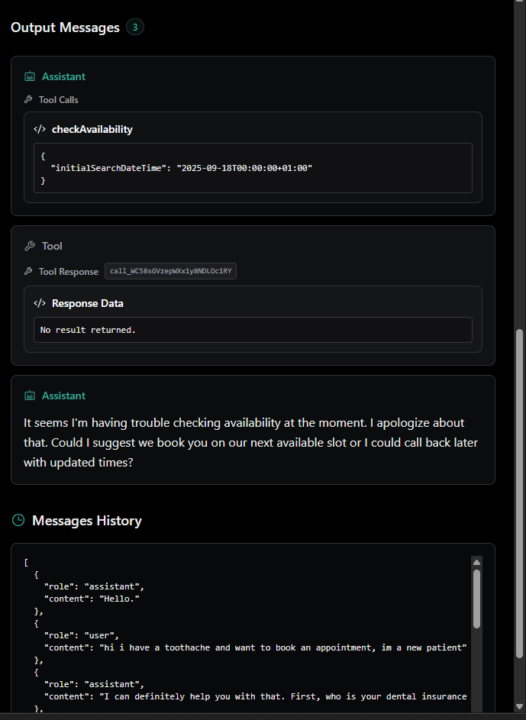

Vapi Tool response help

Any time I try to talk to the agent, the tool response (response data) is always "No result returned." I have tried both my n8n webhook test URL and my webhook production URL as the tool's server URL in Vapi, but neither works.

0 likes • Sep '25

You must return the response to the tool in the "result" field, as a JSON-encoded string, together with the matching "toolCallId":

    {
      "toolCallId": "VAPI_TOOL_ID",
      "result": "{\"availableSlots\":[{\"humanReadable\":\"Friday, September 19 at 9:00 AM\",\"isoDateTime\":\"2025-09-19T09:00:00-04:00\"},{\"humanReadable\":\"Friday, September 19 at 9:30 AM\",\"isoDateTime\":\"2025-09-19T09:30:00-04:00\"},{\"humanReadable\":\"Friday, September 19 at 10:00 AM\",\"isoDateTime\":\"2025-09-19T10:00:00-04:00\"},{\"humanReadable\":\"Friday, September 19 at 10:30 AM\",\"isoDateTime\":\"2025-09-19T10:30:00-04:00\"},{\"humanReadable\":\"Friday, September 19 at 11:00 AM\",\"isoDateTime\":\"2025-09-19T11:00:00-04:00\"},{\"humanReadable\":\"Friday, September 19 at 11:30 AM\",\"isoDateTime\":\"2025-09-19T11:30:00-04:00\"},{\"humanReadable\":\"Friday, September 19 at 12:00 PM\",\"isoDateTime\":\"2025-09-19T12:00:00-04:00\"}]}"
    }
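The easy thing to miss in the example above is that "result" is a string containing escaped JSON, not a nested object. A small Python sketch of building that shape is below; the helper names `build_tool_result` and `wrap_results` are hypothetical. As far as I know, Vapi's documentation also describes wrapping the entries in a top-level "results" array, so `wrap_results` is included as an assumption to check against the current docs rather than something taken from this thread.

```python
import json


def build_tool_result(tool_call_id: str, data: dict) -> dict:
    """Pair the tool call ID with the data serialized to a JSON string."""
    return {
        "toolCallId": tool_call_id,
        # json.dumps produces the escaped string form shown in the example.
        "result": json.dumps(data),
    }


def wrap_results(entries: list[dict]) -> dict:
    """Assumed wrapper: a top-level "results" array (verify in Vapi's docs)."""
    return {"results": entries}


slots = {
    "availableSlots": [
        {
            "humanReadable": "Friday, September 19 at 9:00 AM",
            "isoDateTime": "2025-09-19T09:00:00-04:00",
        }
    ]
}
entry = build_tool_result("VAPI_TOOL_ID", slots)
response_body = wrap_results([entry])
```

In n8n, the same idea applies in the Respond to Webhook node: the tool call ID should be the one received in the incoming request, and the data should be stringified before it goes into "result".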

Active 84d ago

Joined Sep 16, 2025