Memberships

Women Build AI

1.6k members • Free

The AI-Driven Business Summit

7.4k members • Free

SHE IS AI Community

99 members • $19/m

AI-Smart CEOs with Wendy

2.4k members • Free

Ai Filmmaking

8k members • $5/month

35 contributions to SHE IS AI Community

Building the next generation AI workforce

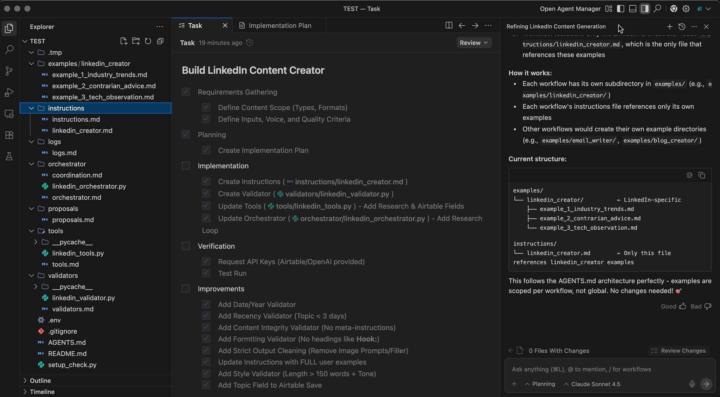

I know that most people use Antigravity to build apps and websites, but I've been testing something different: using code to build AI employees. I built it in Antigravity, but my system is platform agnostic, so I can do it in Claude Code, Cursor, or any other IDE that connects to a coding assistant.

Here's exactly how I did it (and how long it took):

1. I asked Claude to design a complete blueprint for building AI employees: a 2,590-line instruction file called AGENTS.md. Think of it as the DNA for my AI workforce.
2. I uploaded it to Google's Antigravity and gave it one simple prompt: "Setup the infrastructure using AGENTS.md"
3. In less than 10 minutes, my entire infrastructure was built without me touching anything. I literally sat at my desk watching YouTube videos while the AI did everything.
4. I gave it another prompt: "Build a LinkedIn content creator". That's it.
5. From there the AI took over completely. I just sat there babysitting and answering questions like "What should the LinkedIn creator do?" or "Do you have examples?" I also provided API keys when it asked.
6. 15 minutes later, my LinkedIn content creator was done.
7. I started testing and fine-tuning using plain English: no code, just conversations.
8. I spent about an hour testing and refining until it worked the way I wanted. (Yes, it definitely needs way more stress testing and fine-tuning, but as an MVP it was ready.)
9. Here's where it gets wild: the AI logs every run, and after about 20 attempts, it analyzes the patterns and suggests improvements to its own instructions based on what worked and what didn't.
10. When those recommendations come in, I review them. If I approve, it rewrites its own instructions. I don't have to do anything else. It literally makes itself better over time.

And now that the infrastructure is set up, if I want to build another AI employee, I just tell the AI what I want in plain English.
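For anyone curious what the log-and-improve loop in steps 9 and 10 might look like in code, here is a minimal sketch. It is not taken from the AGENTS.md file: the names `AIEmployee`, `RunLog`, `record_run`, `propose_improvement`, and `apply_if_approved` are all hypothetical, the threshold of exactly 20 runs is an assumption based on "about 20 attempts", and the "analysis" is a simple stand-in (collecting notes from failed runs) for whatever pattern analysis the AI actually performs.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional

# Assumption: the post says "about 20 attempts"; a fixed value is used here.
REVIEW_EVERY = 20


@dataclass
class RunLog:
    """One logged run of the AI employee (hypothetical structure)."""
    prompt: str
    output: str
    success: bool
    note: str = ""


@dataclass
class AIEmployee:
    """Hypothetical wrapper holding an employee's instructions and run history."""
    name: str
    instructions: str
    logs: List[RunLog] = field(default_factory=list)

    def record_run(self, prompt: str, output: str, success: bool,
                   note: str = "") -> Optional[str]:
        """Log a run; after every REVIEW_EVERY runs, return a proposal (or None)."""
        self.logs.append(RunLog(prompt, output, success, note))
        if len(self.logs) % REVIEW_EVERY == 0:
            return self.propose_improvement()
        return None

    def propose_improvement(self) -> Optional[str]:
        """Stand-in for the AI's analysis: gather notes from recent failed runs."""
        recent = self.logs[-REVIEW_EVERY:]
        failure_notes = sorted({r.note for r in recent if not r.success and r.note})
        if not failure_notes:
            return None
        return "Avoid these recurring problems: " + "; ".join(failure_notes)

    def apply_if_approved(self, proposal: str,
                          approve: Callable[[str], bool]) -> bool:
        """Rewrite the instructions only if the human reviewer approves."""
        if approve(proposal):
            self.instructions += "\n" + proposal
            return True
        return False


# Example: 20 simulated runs, with every fifth run marked as a failure.
employee = AIEmployee("LinkedIn content creator",
                      "Write one LinkedIn post per request.")
proposal = None
for i in range(20):
    failed = i % 5 == 0
    proposal = employee.record_run(
        prompt=f"post {i}",
        output="...",
        success=not failed,
        note="post too long" if failed else "",
    )

if proposal:
    # In real use, `approve` would ask a person; here it always says yes.
    employee.apply_if_approved(proposal, approve=lambda p: True)
```

The point of the design is that nothing changes the instructions without passing through `approve`, which mirrors the human review in step 10.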

🌟 New Series: The Weekly Spotlight is ON

🔥 AKA: The ENGAGEMENT RELAY 🏁

Every week starting now, we’re handing the mic to one amazing community member to lead the charge inside Skool for 7 days. Here’s how it works:

🎤 YOU get the spotlight

- Your own featured post pinned at the top for the week
- Space to post a minimum of 5 resources, tips, tools, workflows, wins, or prompts
- The community gets to engage, ask, learn, and connect with you all week
- Then your week gets archived in the Classroom Vault as a legacy of your brilliance!

📣 WE amplify you

- I’ll share your bio, highlights, and content on our socials
- You’ll inspire and help others while growing your visibility in the AI space

⚡ Bonus points if you:

- Go live for a mini demo or AMA
- Drop a poll or challenge
- Share a behind-the-scenes or workflow

Then we pass the baton to the next person!

🧠 Want in? Drop your name, week, and ideas here ➡️

📅 Choose your week (or I’ll lovingly assign one)

Because I want to hear from ALL of you! I know life gets full, but if you’re still here, it’s because this matters to you. Let’s rise together again, one week, one voice, one ripple at a time.

Who's in? 👇

Nano banana prompt guide

https://www.patreon.com/posts/nano-banana-147850779?utm_medium=clipboard_copy&utm_source=copyLink&utm_campaign=postshare_creator&utm_content=join_link

Building Credibility in an AI-Swamped World

Why more AI doesn’t automatically mean more trust

We are living through the peak of the AI hype cycle. Trillions of dollars are being poured into infrastructure, tools, and promises of productivity. But beneath the optimism sits a quieter problem: credibility erosion. More AI-generated information doesn’t automatically lead to better outcomes. In many cases, it does the opposite.

This article is a curated and abridged reflection on a powerful talk by Eva Digital Trust, exploring how generative AI, when used carelessly, can quietly undermine trust, expertise, and brand credibility, and what to do instead.

👉 Watch Eva’s original presentation here: https://www.evadigitaltrust.com/speaking?utm_source=substack&utm_medium=email#h.sj3r0zwe6ytz

1. AI hype doesn’t equal value

AI investment numbers are staggering, but hype alone doesn’t deliver ROI. When use cases are vague and productivity gains don’t materialise, pressure builds, especially on leaders, to prove AI is “working.” The problem isn’t AI itself. It’s deploying it without clarity, strategy, or accountability.

2. Hallucinations are a feature, not a bug

Large language models don’t “know” facts; they predict patterns. That means hallucinations are inherent to how they work. The danger is subtle: outputs often sound confident, structured, and professional, while quietly being wrong, irrelevant, or misaligned with context, regulation, or real constraints. This leads to what’s now called “workslop”: polished-looking content that creates more rework, more risk, and more cost. You can’t slop your way to a credible strategy, product, or point of view.

3. Visible AI use can trigger bias

Research shows that openly disclosing AI use can lower perceptions of competence, particularly for women, older workers, neurodivergent professionals, and people writing in a second language. AI may aim for neutrality. Humans do not. This means credibility isn’t just about whether you use AI, but how visibly and how thoughtfully you use it.

🚨SHE IS AI Supports Ellen Craven and Cincy Women in AI! 🎉

This is awesome and such a powerful way to kick off 2026. 🙌

Our incredible ambassador @Ellen Craven is launching a Cincy Women in AI sub-group as part of CincyAI for Humans (a community of 2,000+!). 💥

✨ This new group will be sponsored by SHE IS AI and guided by the mission we all share: to build confidence, clarity, and leadership in the age of AI with heart, humanity, and real community.

I’m so excited for Ellen, and grateful to have her representing us in this way and leading the charge in Cincy. Her vision, creativity, and years of brand + innovation experience will bring incredible value to the group and the network.

We’ll be supporting this group with resources, mentorship, visibility, connection, and whatever else we can do, and we’re so excited to see more local ecosystems like this forming around the world. 🌍

Let’s go Ellen! Let’s go Cincinnati! Let’s go SHE IS AI!

Amanda 💛


@christine-simonson-2420

Founder of Wild Thistle Moon. Building Disruptor Copy, a hook-and-caption tool for content. Here to share what I’m testing and learning in real time.

Active 2d ago

Joined Sep 26, 2025

Texas
