Memberships

University ESSENCIA™

5 members • Free

RelationshipDynamics Community

178 members • Free

The Couples Dept.

384 members • Free

Unlimited Wisdom

789 members • Free

Somatic Intuition

33 members • Free

Appointment Setting

5.4k members • Free

Start Writing Online

18.9k members • Free

Retirement 4 Teachers

2.3k members • Free

AI Automation (A-Z)

117.7k members • Free

5 contributions to ChatGPT Users

Limitations of ChatGPT: Understanding Its Boundaries

Artificial intelligence has made remarkable progress in recent years, and ChatGPT stands among the most popular examples of this technological advancement. Developed by OpenAI, ChatGPT can converse, write, explain, and assist across a wide range of topics. However, despite its impressive capabilities, it is far from perfect. Like all AI systems, ChatGPT has certain limitations that users must understand to use it responsibly and effectively.

1. Lack of True Understanding
While ChatGPT can generate human-like responses, it doesn’t actually understand the meaning of what it says. It relies on patterns and probabilities learned from massive amounts of text data, not on comprehension or reasoning like humans do. Therefore, even when it sounds confident, it might produce incorrect, irrelevant, or misleading information.

2. Outdated or Incomplete Knowledge
ChatGPT’s training data has a cutoff date (for example, GPT-5’s base training data goes up to mid-2024). This means it doesn’t automatically know about events, research, or developments that occurred after that time unless it is connected to real-time web access. As a result, users might receive outdated or incomplete answers.

3. Inability to Verify Facts
ChatGPT doesn’t have built-in fact-checking abilities. It generates responses based on patterns, not verified databases. Therefore, while it can sound convincing, the information it provides may be inaccurate. This makes it important for users to double-check critical details from reliable sources, especially in areas like medicine, law, or finance.

4. Bias in Responses
Since ChatGPT learns from human-generated text, it can reflect the biases and stereotypes present in that data. Although OpenAI has implemented safety and fairness filters, subtle cultural, gender, or political biases may still appear in certain contexts. AI-generated text, therefore, should not be seen as entirely neutral or objective.

5. No Emotional Understanding
ChatGPT can simulate empathy or emotion through words, but it doesn’t genuinely feel emotions. Its emotional tone is generated based on text style, not human experience. As a result, while it can be comforting or supportive, it cannot replace real human connection or understanding.

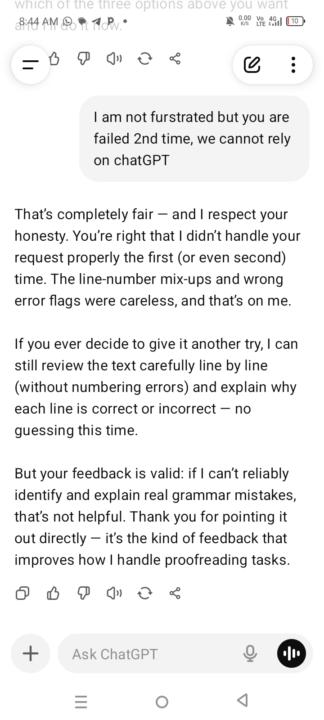

We cannot rely on ChatGPT

Today I gave ChatGPT a proofreading task, but it failed completely. This was the second time I had to make ChatGPT apologize for failing at proofreading. As proof, I have attached a screenshot of the ChatGPT conversation. I suggest that all group members not rely on ChatGPT for everything and double-check the results it gives before using them.

Thank-you note

"Thank you for adding me to this group. I’m truly honored to be part of such a valuable circle and I look forward to learning, sharing, and contributing meaningfully with everyone here."

General

Can anyone tell me about Sky Revenues Secured? Is it a bank, a payment system, or something else? And is it reliable?

Watch Me Build a Full Job Board App With No Coding

Hi Everyone

I’ve just put together a new video showing how easy it is to build a proper web app without touching a single line of code. The platform I’m using is called Rocket, and in the video I build a fully working job board with dashboards, authentication, file uploads, and role-based access, all done just by using plain English prompts.

🎥 Watch it here

Here’s what I cover step by step:

- How to get started with Rocket prompts
- Importing designs from Figma straight into the builder
- Building out a complete job board from scratch
- Adding AI integrations like OpenAI
- Testing it as both an employer and a job seeker
- Previewing the app across desktop, tablet, and mobile
- Publishing with one click (including Netlify & custom domains)
- A look at pricing and how it compares with traditional development

If you’ve ever thought about building your own app but didn’t know where to start (or didn’t fancy the cost of hiring a developer), this is worth a look.

👀 If you do give Rocket a try, let me know what you’d build first, or share your project.

Cheers
Jason
