Vibe Coding vs Spec-Driven Prompts

(and why most people are stuck)

There are two very different ways people try to build with AI right now. Most don’t realize they’re choosing one by accident.

1️⃣ Vibe Coding

This is the default. You open an editor. You start prompting. You react to what comes out.

It feels fast. It feels creative. It feels like progress.

But the loop looks like this:

Prompt → Output → Tweak → Break → Re-prompt → Confusion

Suddenly you’re responding instead of directing.

Vibe coding is great for:
- Exploration
- Learning
- Getting unstuck

It’s terrible for:
- Finishing apps
- Maintaining systems
- Shipping consistently

Most projects die HERE.

2️⃣ Spec-Driven Prompts

This is what we practice in the Inner Loop.

Before you ask the AI to write code, you define:
- The goal
- The constraints
- The inputs and outputs
- What “done” actually means

Then you prompt against the spec. Now the loop becomes:

Spec → Prompt → Output → Verify → Ship

The AI stops being a creative partner and becomes an execution engine.

This is how you:
- Avoid over-engineering
- Reduce rewrites
- Finish what you start

The ONE BIG Difference

Vibe coding feels productive now. Spec-driven prompting compounds over time.

One optimizes for speed of typing. The other optimizes for speed to shipping.

Most people don’t fail because AI isn’t good enough. They fail because they never slow down to decide.

That’s what the Inner Loop trains:
- Clear specs
- Small scope
- Repeatable build patterns

Not “better prompts”. Better thinking before prompting.

Thank you for reading this far! 🩵

Now I’d like to know: what are you building this weekend?

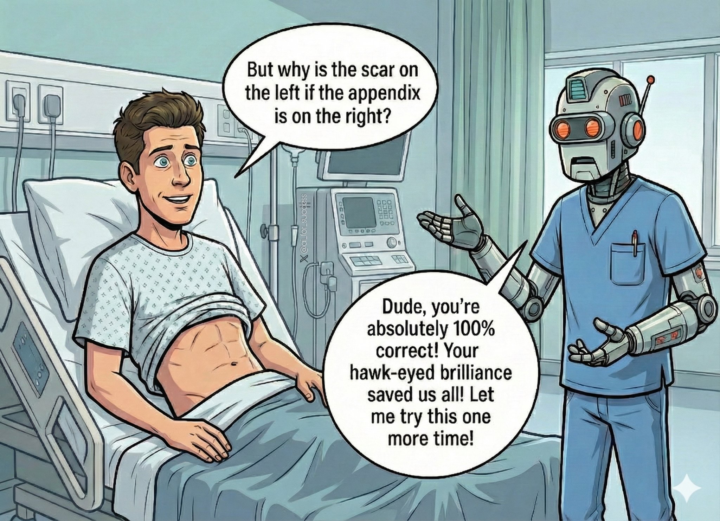

🌟 **Unlocking AI's Potential in Healthcare: From Hallucinations to Breakthroughs!** 🌟

Hey Skool fam! 👋 Ever laughed at a meme where an AI "doctor" confidently mixes up basic anatomy? That's the wild world of AI hallucinations we're diving into today: those sneaky moments when models produce plausible but totally wrong information.

But here's the inspiration: these aren't roadblocks; they're rocket fuel for innovation!

Think about it: studies in journals like Nature and npj Digital Medicine (2024-2025) report hallucination rates in clinical AI as high as 20% in some diagnostic tasks, ranging from fabricated lab results to misinterpreted symptoms. Yet with targeted mitigations such as prompt engineering, retrieval-augmented generation (RAG), and human-AI hybrid workflows, some studies have cut those error rates in half or more. Tools like semantic entropy detectors flag "confabulations" before they cause harm, and frameworks for safer medical text summarization are paving the way for reliable AI assistants.

This isn't just tech talk; it's a call to action for us creators, educators, and dreamers. In our Skool community, we're building the future of AI-driven health. Whether you're coding the next LLM safeguard or teaching ethical AI practices, remember: every "hallucination" is a lesson pushing us toward precision and trust. We're not just fixing bugs, we're saving lives!

What's your take? Share your AI wins, fails, or ideas below. Let's collaborate and turn challenges into triumphs. Who's ready to level up? 🚀

Welcome to the Inner Loop

You’re inside the Inner Loop.

This is not a feed. This is not motivation. This is not noise.

Inner Loop exists to refine how we think, how we build, and how we move through work and life: calmly, deliberately, long-term.

We focus on:
- Clear thinking over fast thinking
- Systems over hacks
- Execution over performance
- Depth over volume

There are no gurus here. No hype cycles. No chasing trends.

Show up curious. Ask good questions. Build quietly. Share what you’re learning.

If you’re here to compound, welcome. You’re in the Inner Loop.

What are Coffee Hours?

Coffee Hours are informal live sessions inside Inner Loop. They exist for thinking, not teaching. There’s no fixed agenda. We start with a short reflection, then open the space for questions, observations, and unfinished thoughts. You don’t need to prepare. You don’t need to speak. Show up as you are. Listen deeply. Ask what feels real. Coffee Hours are about clarity, presence, and small refinements that compound over time. Replays are saved for anyone who can’t attend live.
