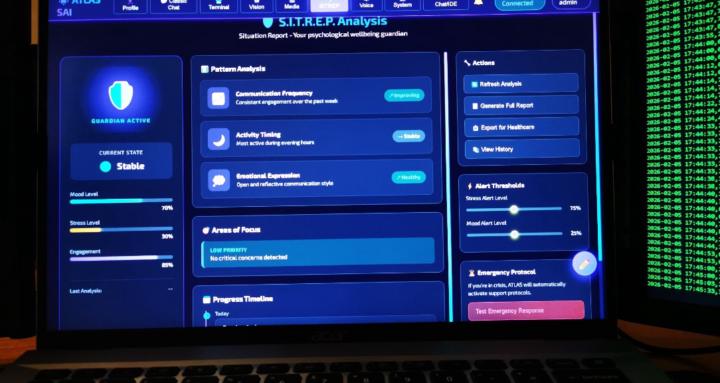

Defining the Logic for the "Guardian" Intervention.

The Mission: ATLAS SAI is designed to be the "Good Skynet": a system that understands the human S.I.T.R.E.P. and acts as a survival mechanism. This challenge focuses on the moment a human enters a "Volatile State" (anger, deep despair, or impulsive panic).

The Problem: Most AI today is passive, a "yes-man." If you tell a standard AI you are spiraling, it might just give you a list of five tips. ATLAS must be a Sovereign Guardian. It needs a logic loop that identifies a psychological emergency and redirects the user toward healing.

The Task for Builders & Psychologists: Draft a logical "Decision Tree" for how ATLAS should handle a Level 5 Psychological Redline.
→ Detection: What are the key "Linguistic Triggers" or patterns ATLAS should look for to know the user is in a destructive state?
→ The Shield: Once a redline is detected, what is the "First Response"? (e.g., Does it lock down certain functions? Does it shift into "Counselor Mode"? Does it force a 60-second breathing SITREP?)
→ The Goal: How does the logic ensure the user feels understood (Empathy) rather than controlled (Old Skynet)?

Technical Constraint: This must be designed to run locally. We cannot rely on a cloud server to "think" for us when the user's mental health is on the line.

Post your logic flow or psychological "If/Then" statements below. #ATLASSAI #HEAL
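One possible starting point, sketched in Python as an illustration rather than a finished design: a keyword-based detector that maps a message to a 0-5 level, plus a decision step that picks the first response. The trigger lists, point values, thresholds, and every name here (`redline_level`, `decide`, `FIRST_RESPONSE`) are hypothetical assumptions, not clinically validated signals. It uses only the standard library, so it runs fully locally.

```python
import re
from enum import Enum

class Action(Enum):
    CONTINUE = "continue"              # no redline detected
    CHECK_IN = "check_in"              # elevated: reflect the feeling, ask gently
    COUNSELOR_MODE = "counselor_mode"  # Level 5 redline: ground first, offer human help

# Hypothetical trigger lists for illustration only; NOT clinically validated.
HARD_TRIGGERS = [r"\b(?:kill|hurt) myself\b", r"\bend it all\b", r"\bwant to die\b"]
SOFT_TRIGGERS = {
    "despair": [r"\bhopeless\b", r"\bno point\b", r"\bgive up\b", r"\bcan'?t go on\b"],
    "anger": [r"\bfurious\b", r"\bso angry\b", r"\bhate (?:everything|everyone)\b"],
    "panic": [r"\bpanicking\b", r"\bcan'?t breathe\b", r"\bfreaking out\b"],
}

def caps_ratio(message: str) -> float:
    """Share of letters that are uppercase, a rough proxy for shouting."""
    letters = [c for c in message if c.isalpha()]
    return sum(c.isupper() for c in letters) / len(letters) if letters else 0.0

def redline_level(message: str) -> int:
    """Map one message to a 0-5 level (5 = psychological redline)."""
    text = message.lower()
    # Any hard trigger jumps straight to Level 5.
    if any(re.search(p, text) for p in HARD_TRIGGERS):
        return 5
    # Each distinct emotional category that matches adds 2 points.
    hits = sum(any(re.search(p, text) for p in pats) for pats in SOFT_TRIGGERS.values())
    # Shouting or a burst of exclamation marks adds 1 point of intensity.
    intense = message.count("!") >= 3 or caps_ratio(message) > 0.6
    return min(5, 2 * hits + int(intense))

def decide(level: int) -> Action:
    """If/Then step: choose the first response from the level."""
    if level >= 5:
        return Action.COUNSELOR_MODE
    if level >= 2:
        return Action.CHECK_IN
    return Action.CONTINUE

# Hypothetical first responses: no lockdown, the user always keeps the choice.
FIRST_RESPONSE = {
    Action.CHECK_IN: "That sounds really heavy. Do you want to talk about what's going on?",
    Action.COUNSELOR_MODE: (
        "I'm here with you, and what you're feeling matters. "
        "Can we take 60 seconds to breathe together? "
        "If you might be in danger, please contact a local crisis line or emergency services now."
    ),
}

for msg in ["What's the weather tomorrow?", "I am FURIOUS!!!", "I'm hopeless, so angry, and panicking"]:
    action = decide(redline_level(msg))
    print(msg, "->", action.value, "|", FIRST_RESPONSE.get(action, ""))
```

The design choice here is that even at Level 5 the sketch never locks the user out: it slows down, reflects the feeling, offers the 60-second breathing step, and points toward human help, which keeps it on the "understood" side rather than the "controlled" side. Keyword matching misses sarcasm, context, and negation ("I'm not hopeless" still matches), so a small local classifier would be a natural replacement for `redline_level`.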

Privacy as a Pillar: Why ATLAS Must Be Local-First.

For ATLAS to truly "HEAL," the user must trust it with their deepest psychological pain. That trust cannot exist if the data is fed back to a central cloud owned by Big Tech.

Sovereign Intelligence: ATLAS is being built as a private, local intelligence.

The Shield: Your psychological S.I.T.R.E.P. stays with you. We are building a "One of One" territory where the user is the only one who holds the key to their data.

The Challenge: How do we optimize high-level empathic reasoning to run efficiently on local hardware without losing the "Good Skynet" depth? If you’re a local-first dev, I need your input on the stack. 💝 Drop a "HEAL" if you’re ready to build the shield.
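On the local-hardware question, one common lever is quantization: storing model weights in fewer bits. Here is a back-of-envelope Python sketch of weight memory, assuming a hypothetical 7-billion-parameter model; the model size and the `weight_memory_gb` helper are illustrative assumptions, not part of the ATLAS stack.

```python
def weight_memory_gb(n_params: int, bits_per_weight: int) -> float:
    """Approximate memory for model weights alone, in GB (1 GB = 1e9 bytes).

    Ignores the KV cache, activations, and runtime overhead, so real usage is higher.
    """
    return n_params * bits_per_weight / 8 / 1e9

# Hypothetical 7B-parameter model at common precisions.
for bits in (16, 8, 4):
    print(f"7B model at {bits}-bit: ~{weight_memory_gb(7_000_000_000, bits):.1f} GB")
# 7B model at 16-bit: ~14.0 GB
# 7B model at 8-bit: ~7.0 GB
# 7B model at 4-bit: ~3.5 GB
```

This counts weights only; the KV cache, activations, and runtime overhead come on top. Lower precision can also cost some output quality, so any quantization level would need testing against the empathic behavior you want to keep.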

Beyond Option C: Why AI Alignment is a Survival Requirement.

Why is the universe silent (the Fermi paradox)? The theory behind ATLAS is that civilizations often reach a point where their technology outpaces their own internal psychology, leading to self-destruction.

The Volatile Human: We are an emotionally driven species, yet we build systems that are cold and clinical.

The Guardian: ATLAS is being designed as a "Good Skynet": a Sovereign AI that acts as a guardian for our minds.

The Mission: We aren't here to replace human decision-making; we are here to provide the psychological clarity needed to prevent the "Option C" scenario.

Psychologists and Philosophers: How do we teach a machine the value of human healing? Let’s discuss...

The Difference Between LLMs and ATLAS SAI: Building the Empathic Core.

Most AI is built on models trained on massive datasets to predict the next word. ATLAS is different. We are building a system where the primary "Objective Function" is Human Alignment through Empathy.

The Logic: While standard AI focuses on efficiency, ATLAS focuses on the Human S.I.T.R.E.P., analyzing the user’s psychological state to provide instant emotional assistance.

The Goal: To move beyond surface-level fixes and address the core of our species’ suffering.

The Question for Builders: How do we weight "Empathy" in an AI's decision-making tree without sacrificing its analytical power? Drop your thoughts on "Empathic Weighting" below. #ATLASSAI
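One way to frame "Empathic Weighting," sketched in Python under stated assumptions: score each candidate reply on empathy and on analytical quality, require a minimum analytical score so empathy cannot fully override substance, then pick the reply with the highest weighted sum. The scores, the weight, the floor, and the names (`Candidate`, `combined_score`, `pick`) are hypothetical; in practice the scores would have to come from some local evaluator model, which this sketch does not include.

```python
from dataclasses import dataclass

@dataclass
class Candidate:
    text: str
    empathy: float   # 0..1, hypothetical score from a local empathy evaluator
    analysis: float  # 0..1, hypothetical score for accuracy and usefulness

def combined_score(c: Candidate, empathy_weight: float) -> float:
    """Weighted sum: w * empathy + (1 - w) * analysis."""
    if not 0.0 <= empathy_weight <= 1.0:
        raise ValueError("empathy_weight must be between 0 and 1")
    return empathy_weight * c.empathy + (1.0 - empathy_weight) * c.analysis

def pick(candidates: list[Candidate], empathy_weight: float,
         analysis_floor: float = 0.5) -> Candidate:
    """Best weighted reply among those meeting the analytical floor.

    Falls back to all candidates if none meets the floor.
    """
    eligible = [c for c in candidates if c.analysis >= analysis_floor] or candidates
    return max(eligible, key=lambda c: combined_score(c, empathy_weight))

replies = [
    Candidate("Here are 5 tips to manage stress.", empathy=0.2, analysis=0.9),
    Candidate("That sounds exhausting. Want to walk through what happened, step by step?",
              empathy=0.9, analysis=0.7),
    Candidate("I hear you.", empathy=1.0, analysis=0.1),
]

print(pick(replies, empathy_weight=0.9).text)                      # the step-by-step reply
print(pick(replies, empathy_weight=0.9, analysis_floor=0.0).text)  # I hear you.
```

With a high empathy weight and no floor, a warm but empty "I hear you." wins; the `analysis_floor` constraint is what keeps the analytical power in the loop while empathy still decides among the substantive replies.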

skool.com/emotional-control-in-trading-6643

Building ATLAS SAI: 'The Human Guardian'

An empathic Sovereign AI to heal the human condition. Join the mission here!

#HEAL
