For Beginners: ClawdBot Setup

Most of you have probably heard of ClawdBot/OpenClaw by now. I've been playing with it and it's definitely worth the hype, especially for something that's still in beta. Just be careful what you have it do, since it's still early days and there are known security holes. For beginners who don't know Terminal, ChatGPT is really good at walking you through the setup process, including connecting to your preferred messaging app like Telegram: just share screenshots of your terminal screen with it. The whole setup will take about 45 minutes. I'll share use cases as I work through them myself.

N8N Help

Hey, I run a business generating viral AI car photos for Instagram pages. Right now I manually handle everything, but I want a fully automated, no-human-in-the-loop production system.

End goal: Clients submit two images (car + viral reference) → system auto-queues → AI generates outputs → post-processing → delivery → logging + status updates. I want this engineered to safely handle 100+ concurrent jobs without crashing or mixing client files.

Key requirements:

- queue system / job orchestration
- scalable AI engine (API-driven)
- Google Drive or storage triggers
- automatic post-processing
- client-specific delivery folders
- error handling + retries
- logging dashboard

If you’ve built production automation pipelines or AI generation factories before, I’d love to talk.
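For anyone picking this up, here is a minimal Python sketch of the core of such a pipeline: a concurrency cap, retries, per-client delivery paths, and a log. Everything in it is an assumption for illustration: `Job`, `fake_generate`, `delivery_path`, the `deliveries/` folder layout, and the limits of 10 concurrent calls and 3 attempts are hypothetical, and `fake_generate` stands in for whatever image-generation API is actually used. It is not an n8n workflow, just the shape of the logic.

```python
import asyncio
from dataclasses import dataclass
from typing import Awaitable, Callable, List, Optional

MAX_CONCURRENCY = 10  # assumed cap on simultaneous generation calls
MAX_ATTEMPTS = 3      # assumed retry budget per job


@dataclass
class Job:
    client_id: str
    job_id: str
    car_image: str
    reference_image: str
    attempts: int = 0
    status: str = "queued"
    output_path: Optional[str] = None


def delivery_path(job: Job) -> str:
    # The path is built only from the job's own IDs, so two clients
    # can never write into the same folder.
    return f"deliveries/{job.client_id}/{job.job_id}.png"


async def fake_generate(job: Job) -> None:
    # Placeholder for the real generation API call (hypothetical).
    # Jobs whose ID ends in "flaky" fail once to exercise the retry path.
    await asyncio.sleep(0)
    if job.job_id.endswith("flaky") and job.attempts < 2:
        raise RuntimeError("transient failure")


async def run_job(
    job: Job,
    generate: Callable[[Job], Awaitable[None]],
    limiter: asyncio.Semaphore,
    log: List[str],
) -> None:
    while job.attempts < MAX_ATTEMPTS:
        job.attempts += 1
        try:
            async with limiter:  # at most MAX_CONCURRENCY calls in flight
                await generate(job)
            job.status = "done"
            job.output_path = delivery_path(job)
            break
        except Exception as exc:
            job.status = "retrying" if job.attempts < MAX_ATTEMPTS else "failed"
            log.append(f"{job.job_id} attempt {job.attempts}: {exc}")
    log.append(f"{job.job_id} -> {job.status}")


async def run_all(
    jobs: List[Job],
    generate: Callable[[Job], Awaitable[None]] = fake_generate,
) -> List[str]:
    limiter = asyncio.Semaphore(MAX_CONCURRENCY)
    log: List[str] = []
    await asyncio.gather(*(run_job(j, generate, limiter, log) for j in jobs))
    return log
```

A run would look like `asyncio.run(run_all([Job("acme", "a1", "car.png", "ref.png")]))`. In a real build the in-memory list would likely be replaced by a durable queue and the log by a database table feeding the dashboard, but that part depends on the stack chosen.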

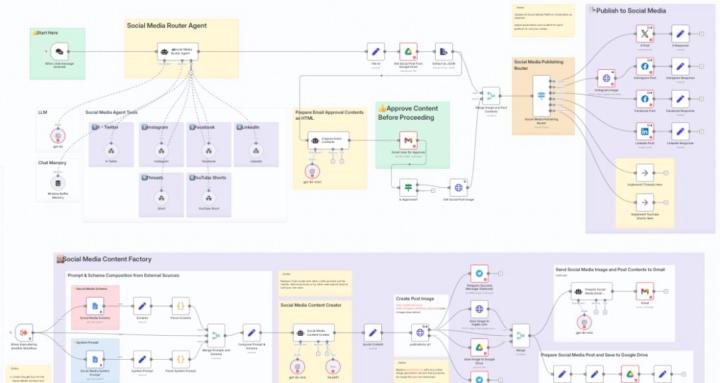

Automation is Redefining Social Media Management

Automation allows social media teams to move from constant posting to strategic growth. Scheduling content, organizing approvals, tracking performance, and responding faster can all happen through smart systems working in the background. This creates consistency, improves engagement, and frees up time for creativity and brand building. When implemented correctly, automation does not replace the human touch; it strengthens it. I know how to build systems that make that happen smoothly.

How Automation Is Improving Healthcare Operations

Automation is transforming healthcare administration and appointment management by bringing structure, speed, and reliability to everyday processes. Scheduling, reminders, patient records, and follow-ups now flow smoothly, with fewer errors and less manual effort. This reduces missed appointments, eases staff workload, and creates a better experience for patients. When built correctly, these systems help healthcare teams stay focused on care while operations run calmly and efficiently in the background.

Stop getting "Prompt too long" errors in n8n 🛑

One of the most common frustrations when building complex AI agents in n8n is hitting that 'Prompt too long' error or seeing the model lose track of instructions as the workflow grows. The secret to scaling isn't a bigger model, it's **MODULARIZATION**.

Instead of building one massive workflow that tries to do everything (and stuffs the entire context into one prompt), I've been using a 'Multi-Agent Factory' approach:

1. **The Orchestrator:** A main workflow that decides which task needs to be done.
2. **The Workers:** Separate workflows for specific tasks (e.g., Data Extraction, Analysis, Formatting) called via the 'Execute Workflow' node.
3. **The Memory:** Using a centralized database or Notion CRM to store intermediate states so each sub-workflow only gets the context it actually needs.

This keeps your prompts clean, your executions fast, and your debugging way easier.

How are you guys handling context limits in your production builds? Are you using vector DBs or just aggressive chunking? Let's discuss! 👇
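To make the three roles concrete, here is a rough Python sketch of the pattern outside n8n. It is only a model of the idea, not n8n's API: `STORE` stands in for the database or Notion CRM, the three worker functions stand in for sub-workflows, and all names (`orchestrate`, `WORKERS`, the `raw`/`facts`/`summary` keys) are made up for illustration.

```python
from typing import Callable, Dict, List, Tuple

# Stand-in for the centralized database: run ID -> saved intermediate state.
STORE: Dict[str, Dict[str, str]] = {}


def extract(ctx: Dict[str, str]) -> Dict[str, str]:
    # Worker 1: normalizes the raw input.
    return {"facts": ctx["raw"].strip().lower()}


def analyze(ctx: Dict[str, str]) -> Dict[str, str]:
    # Worker 2: sees only the extracted facts, never the raw input.
    return {"summary": f"{len(ctx['facts'].split())} words"}


def format_report(ctx: Dict[str, str]) -> Dict[str, str]:
    # Worker 3: sees only the summary.
    return {"report": f"Summary: {ctx['summary']}"}


# Task name -> (worker function, the state keys that worker is allowed to read).
WORKERS: Dict[str, Tuple[Callable[[Dict[str, str]], Dict[str, str]], List[str]]] = {
    "extract": (extract, ["raw"]),
    "analyze": (analyze, ["facts"]),
    "format": (format_report, ["summary"]),
}


def orchestrate(run_id: str, raw: str, plan: List[str]) -> Dict[str, str]:
    # The orchestrator decides the order of tasks and hands each worker
    # only the slice of state it needs, which keeps each prompt small.
    state = STORE.setdefault(run_id, {})
    state["raw"] = raw
    for task in plan:
        worker, needs = WORKERS[task]
        context = {key: state[key] for key in needs}
        state.update(worker(context))  # persist the intermediate result
    return state
```

Calling `orchestrate("run-1", "some input text", ["extract", "analyze", "format"])` runs the three workers in order. In n8n, each worker would presumably be its own workflow and the dictionary lookup a database read, but the context-slicing step is the part that keeps prompts short.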

skool.com/ai-n8n-automation-collective-8312

Ready to escape the grind? Connect with others using AI & n8n to offload boring, repetitive work—so you can spend your time on more meaningful things.
