Memberships

Clap Academy Digital Community

451 members • Free

41 contributions to Clap Academy Digital Community

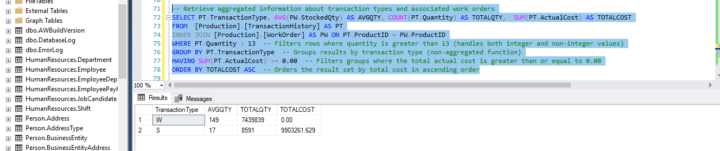

Order of SQL Query Execution

The SQL query below is based on the AdventureWorks2019 database we loaded during SQL class.

```sql
-- Retrieve aggregated information about transaction types and associated work orders
SELECT
    PT.TransactionType,
    AVG(PW.StockedQty) AS AVGQTY,
    COUNT(PT.Quantity) AS TOTALQTY,     -- COUNT returns the number of rows, not the summed quantity
    SUM(PT.ActualCost) AS TOTALCOST
FROM [Production].[TransactionHistory] AS PT
INNER JOIN [Production].[WorkOrder] AS PW
    ON PT.ProductID = PW.ProductID      -- Several work orders can share a ProductID, so a transaction row may repeat once per match
WHERE PT.Quantity > 13                  -- Keeps only rows where quantity is greater than 13
GROUP BY PT.TransactionType             -- Groups results by transaction type (the non-aggregated column)
HAVING SUM(PT.ActualCost) >= 0.00       -- Keeps groups whose total actual cost is at least 0.00
ORDER BY TOTALCOST ASC;                 -- Orders the result set by total cost, ascending
```

Each query begins by finding the data we need in a database and then filtering that data down into something that can be processed and understood as quickly as possible. Because the parts of a query are logically processed in a fixed order, it's important to understand that order so you know which results (such as column aliases) are accessible where. The optimizer may physically reorder the work, but the result is the same as if the clauses ran in this order.

Query order of execution

1. FROM and JOINs: The FROM clause and any subsequent JOINs are executed first to determine the total working set of data being queried. This includes any subqueries in this clause, and may cause temporary tables to be created under the hood containing all the columns and rows of the tables being joined.

2. WHERE: Once we have the total working set of data, the first-pass WHERE constraints are applied to the individual rows, and rows that do not satisfy the constraints are discarded. Each constraint can only access columns directly from the tables requested in the FROM clause. Aliases defined in the SELECT part of the query are not accessible in most databases, since they may include expressions that depend on parts of the query that have not yet executed.

3. GROUP BY: The rows remaining after the WHERE constraints are applied are then grouped based on common values in the column specified in the GROUP BY clause. As a result of the grouping, there will be only as many rows as there are unique values in that column. Implicitly, this means you should only need GROUP BY when your query has aggregate functions.
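
To make the order concrete, here is a minimal sketch using Python's standard-library `sqlite3` module. It runs a SQLite version of the query above (plain table names instead of the `[Production]` schema) against a few hypothetical toy rows, then reproduces the same result by hand, one logical step at a time. The hand-written steps continue past GROUP BY with HAVING, SELECT and ORDER BY, which come next in standard SQL's logical processing order; that is why ORDER BY can refer to the TOTALCOST alias. The table contents, values and helper names (`run_sql`, `run_by_hand`) are assumptions for illustration, not AdventureWorks data.

```python
import sqlite3
from collections import defaultdict

# Hypothetical toy rows: (ProductID, TransactionType, Quantity, ActualCost).
# They stand in for Production.TransactionHistory; they are not AdventureWorks data.
HISTORY = [
    (1, "W", 20, 0.0),
    (1, "S", 15, 30.0),
    (2, "P", 5, 12.5),
    (2, "S", 40, 80.0),
    (3, "P", 14, 7.0),
]
# Hypothetical toy rows: (ProductID, StockedQty), one work order per product.
WORK_ORDERS = [(1, 10), (2, 30), (3, 6)]

# SQLite version of the query above, using plain table names.
QUERY = """
SELECT PT.TransactionType,
       AVG(PW.StockedQty) AS AVGQTY,
       COUNT(PT.Quantity) AS TOTALQTY,
       SUM(PT.ActualCost) AS TOTALCOST
FROM TransactionHistory AS PT
INNER JOIN WorkOrder AS PW ON PT.ProductID = PW.ProductID
WHERE PT.Quantity > 13
GROUP BY PT.TransactionType
HAVING SUM(PT.ActualCost) >= 0.00
ORDER BY TOTALCOST ASC
"""


def run_sql():
    """Let SQLite evaluate the query against an in-memory copy of the toy data."""
    con = sqlite3.connect(":memory:")
    con.execute("CREATE TABLE TransactionHistory "
                "(ProductID INTEGER, TransactionType TEXT, Quantity INTEGER, ActualCost REAL)")
    con.execute("CREATE TABLE WorkOrder (ProductID INTEGER, StockedQty INTEGER)")
    con.executemany("INSERT INTO TransactionHistory VALUES (?, ?, ?, ?)", HISTORY)
    con.executemany("INSERT INTO WorkOrder VALUES (?, ?)", WORK_ORDERS)
    rows = con.execute(QUERY).fetchall()
    con.close()
    return rows


def run_by_hand():
    """Apply each clause in logical processing order, using plain Python."""
    # 1. FROM + JOIN: build the working set of matched (history, work order) pairs.
    joined = [(h, w) for h in HISTORY for w in WORK_ORDERS if h[0] == w[0]]
    # 2. WHERE: discard individual rows before any grouping happens.
    filtered = [(h, w) for h, w in joined if h[2] > 13]
    # 3. GROUP BY: one bucket per distinct TransactionType.
    groups = defaultdict(list)
    for h, w in filtered:
        groups[h[1]].append((h, w))
    # 4. HAVING: discard whole groups based on an aggregate.
    kept = {t: rs for t, rs in groups.items() if sum(h[3] for h, _ in rs) >= 0.00}
    # 5. SELECT: compute each output column (and its alias) per group.
    selected = [
        (
            t,
            sum(w[1] for _, w in rs) / len(rs),  # AVGQTY
            len(rs),                              # TOTALQTY: a row count (no NULLs here)
            sum(h[3] for h, _ in rs),             # TOTALCOST
        )
        for t, rs in kept.items()
    ]
    # 6. ORDER BY: runs last, so it can sort on the TOTALCOST alias.
    return sorted(selected, key=lambda row: row[3])


if __name__ == "__main__":
    print(run_sql())
    print(run_by_hand())
```

Both calls should print `[('W', 10.0, 1, 0.0), ('P', 6.0, 1, 7.0), ('S', 20.0, 2, 110.0)]`. The row with Quantity 5 is removed by WHERE before grouping, so it never contributes to group P. SQLite is lenient and accepts SELECT aliases in WHERE as an extension, so this sketch cannot show the alias error; SQL Server rejects such a query.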

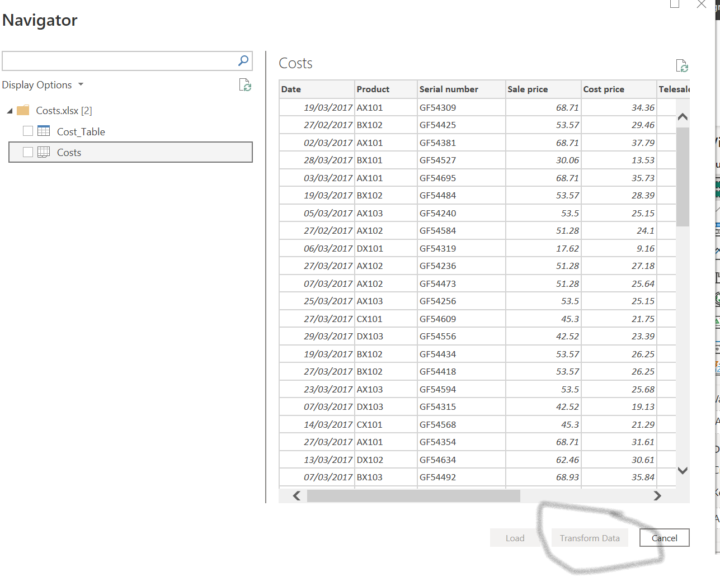

Get Data issue

Hello good people, I have a small challenge. I am practicing removing rows (cost file), and while trying to get data, I noticed that neither the Transform Data nor the Load option is highlighted. Kindly advise how I can get this resolved. See the attachment below. Thank you.

CRISP-DM (Cross-Industry Standard Process for Data Mining) methodology

From a data governance and expertise perspective, the CRISP-DM (Cross-Industry Standard Process for Data Mining) methodology is a structured approach to solving complex data-related problems. It consists of several distinct phases, each playing a crucial role in the success of a data project. Here is each step, with a focus on data governance and expertise:

1. Business Understanding Phase:
   - In this initial phase, your role as a data expert is to engage with the customer to understand their problem clearly. Communicate without technical jargon to ensure alignment.
   - Key questions to ask include:
     - What specific date range are you interested in for this project?
     - Are there any existing reports or data that relate to this request?
   - Use this phase as an opportunity to validate whether the project is scalable.
   - Ask whether project documentation exists for any existing reports. This documentation is vital for understanding historical context.

2. Data Understanding Phase:
   - Your data governance expertise comes into play here. Ask for details about the databases that store the relevant data.
   - If the customer is uncertain, identify and engage with the database administrator responsible for the data source.

3. Data Presentation Phase:
   - While this phase may not directly involve data governance, data experts must present their findings and insights clearly and promptly to stakeholders.

4. Data Modeling Phase:
   - As a data analyst, your role in this phase includes working within a developer environment and establishing table relationships.
   - Ensure that data governance principles, such as data lineage and data quality, are maintained during the modeling process.

5. Validation or Evaluation Phase:
   - Quality assurance testing is a critical aspect of data governance. Ensure that data quality is maintained throughout this phase.
   - Perform thorough validation and evaluation of the models and data to ensure they meet the required standards.

Cross-Industry Standard Process for Data Mining (CRISP-DM)

Data mining is the process of discovering meaningful and valuable patterns, trends, or knowledge in large volumes of data. It uses various techniques and algorithms (data visualization, descriptive statistics, deep learning, sentiment analysis, etc.) to analyze data, uncover hidden patterns, and extract useful information. Data mining is a multidisciplinary field that combines techniques from statistics, machine learning, database management, and domain expertise.

CRISP-DM is valuable in data analysis because it provides a structured, adaptable, and collaborative approach to solving real-world business problems with data-driven insights, helping organizations get the most value from their data and analytics efforts. In particular, it matters for several key reasons:

1. Structured Approach: CRISP-DM provides a structured, well-defined framework for data mining projects. It guides analysts through a systematic process, from understanding the business problem to deploying actionable solutions.
2. Flexibility: It is a flexible methodology that can be adapted to different industries and types of data analysis projects, which makes it widely applicable.
3. Iterative Nature: CRISP-DM acknowledges that data analysis is iterative. It allows earlier stages to be revisited and refined as new insights emerge, keeping the analysis aligned with business goals.
4. Collaboration: It promotes collaboration among stakeholders, including business experts, data scientists, and IT professionals, fostering a cross-functional approach to problem-solving.
5. Efficient Resource Allocation: By defining clear phases and objectives, CRISP-DM helps allocate resources effectively and keeps efforts focused on the desired outcomes.
6. Risk Mitigation: It helps identify potential risks and challenges early in the process, allowing for proactive mitigation strategies.
7. Model Evaluation: CRISP-DM emphasizes evaluating and comparing models against the business objectives, so that the chosen model is the one best suited to the problem.
8. Business Impact: Ultimately, CRISP-DM aims to deliver actionable insights and models that have a positive impact on business operations, decision-making, and outcomes.

Data Minimization

Recently, we were assigned to create forms for collecting training feedback and opinions from students, a task that requires accounting for privacy and security measures. The insights below on data minimization will guide us in future assignments to ensure compliance with privacy and security standards.

Balancing Data Needs and Privacy

1. Clearly Define Objectives: Before designing your data collection form, clearly understand what you're trying to achieve. This will help you pinpoint the types of data that are necessary for your project.
2. Least Privilege Principle: Apply the concept of 'least privilege' to data collection. Just as you would only give employees access to the information they need to perform their jobs, only collect the data points needed for your analytical objectives.
3. Anonymization and Pseudonymization: Where possible, use techniques that de-identify personal information so the data can no longer be used to identify individuals directly. This may allow you to collect more data while still complying with privacy laws.
4. Consent and Transparency: Ensure users are informed about what data is being collected and how it will be used, and obtain their explicit consent.
5. Data Retention Policy: Store data only for as long as it is needed for the intended purpose. Implement automatic deletion or anonymization for data that is no longer needed.
6. Review and Audit: Regularly review data collection forms and procedures to ensure they comply with current laws and align with your current data needs. Document each review and make it available for audit.
7. Dynamic Forms: Consider designing dynamic forms that ask for additional information only when it is required. This technique can minimize unnecessary data collection.

By aligning our data collection strategy with privacy laws, we can build trust with stakeholders and stay ahead of compliance issues, gaining a distinctive advantage in our professional journey.
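
The least-privilege, pseudonymization and retention points above can be sketched with Python's standard library. This is a minimal illustration under stated assumptions, not a compliance tool: the field names (`email`, `course`, `rating`, `comments`, `submitted_at`), the 180-day retention window, the helper names and the hard-coded key are all hypothetical. Pseudonymization here uses a keyed HMAC-SHA256 (`hmac.new`), which is one common technique; pseudonymized data can still count as personal data under laws such as GDPR, because whoever holds the key can re-link it.

```python
from __future__ import annotations

import hashlib
import hmac
from datetime import datetime, timedelta, timezone

# Hypothetical allow-list: the only fields this feedback analysis needs.
ALLOWED_FIELDS = {"course", "rating", "comments", "submitted_at"}

# Hypothetical key for illustration only; load a real key from a secrets store, never hard-code it.
PSEUDONYM_KEY = b"replace-with-a-secret-key"

# Hypothetical retention window for feedback records.
RETENTION = timedelta(days=180)


def pseudonymize(identifier: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Replace a direct identifier with a keyed HMAC-SHA256 token (hex string).

    The same identifier always yields the same token, so repeat responses can
    still be linked, but without the key no one can recompute the token from a
    guessed email address.
    """
    normalized = identifier.strip().lower().encode("utf-8")
    return hmac.new(key, normalized, hashlib.sha256).hexdigest()


def minimize(submission: dict) -> dict:
    """Keep only allow-listed fields and swap the email for a pseudonym."""
    record = {k: v for k, v in submission.items() if k in ALLOWED_FIELDS}
    if "email" in submission:
        record["student_id"] = pseudonymize(submission["email"])
    return record


def purge_expired(records: list[dict], now: datetime) -> list[dict]:
    """Keep only records still inside the retention window; older ones are dropped."""
    return [r for r in records if now - r["submitted_at"] <= RETENTION]


if __name__ == "__main__":
    now = datetime(2024, 1, 31, tzinfo=timezone.utc)
    raw = {
        "email": "Student@Example.com",
        "phone": "555-0100",  # not needed for the analysis, so it is discarded
        "course": "SQL",
        "rating": 5,
        "comments": "Great class",
        "submitted_at": now - timedelta(days=3),
    }
    print(minimize(raw))
```

A keyed HMAC is used rather than a plain SHA-256 hash because a plain hash of an email address can be reversed by hashing a list of likely addresses; without the key, that guessing attack does not work.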

@sunday-idowu-1789

A seasoned cybersecurity, GRC, and data analyst, I have extensive expertise in using data-driven techniques to maximize business performance.

Joined Jun 30, 2023