Memberships

Home Lab Explorers

810 members • Free

9 contributions to KubeCraft (Free)

Favorite programming language?

Hello friends, what is your favorite programming language? Why?

What is your goal?

Hello friends, can I ask you a question? What is your main goal? What are you focusing on right now?
1. Share your goal below
2. Find someone with a similar goal and reply to them
Let's support each other in this community! 🫡
Mischa

My main goal (1-year goal) that I would like to achieve in 2026 is to switch careers from full-time Web Fullstack/Frontend Engineer to full-time DevOps Engineer. This is essentially why I joined this wonderful community this month, and I look forward to growing alongside everyone else at KubeCraft 😊

My short-term goal (1-month goal) is to:
- practice touch typing every day on https://www.keybr.com/
- learn Vim well enough that it becomes the way I write everything on my machine: code, notes, articles, and so on
- learn Linux on a deeper level (Arch Linux, btw 😜)

GPT-5 is here and your DevOps job is safer than ever

GPT-5 launched this week with the usual fanfare and “revolutionary breakthrough” claims. The reality? “Overdue, overhyped and underwhelming.” That’s how AI researcher Gary Marcus described it just hours after launch.

THE BRUTAL X REALITY CHECK

Within hours of the GPT-5 livestream, prediction markets told the real story. OpenAI’s chances of having the best AI model dropped from 75% to 14% in one hour. Users on X flooded the platform with harsh reactions, calling it a “huge letdown,” “horrible,” and “underwhelming.” GPT-5 even gave wrong answers when asked to count the letters in “blueberry.”

WHY AI AGENTS WILL NOT REPLACE YOUR DEVOPS WORK, FOR NOW

Gary Marcus predicted exactly what we’re seeing. His analysis shows why AI agents pose zero threat to DevOps professionals.

The key issue is that current AI systems work by copying patterns, not by real understanding. Marcus calls this “mimicry vs. deep understanding.” AI can copy the words people use to complete tasks, but it has “no concept of what it means to delete a database.”

This matters for DevOps work. When you debug a networking issue between services, you don’t just run commands; you form ideas about how systems behave under load. An AI might know the `kubectl get pods` syntax, but it doesn’t understand why pod networking fails, or what that failure means for the other services in your environment.

Marcus notes that complex tasks involve multiple steps. DevOps work has many steps: deploy, monitor, check results, maybe roll back. This is why AI agents are not going to replace us anytime soon.

Large Language Models (LLMs) are relatively simple input-output systems. They are useful, but their output is unreliable: so far it is nearly impossible to make an LLM reliably give the same output in the same format, especially when the input can vary wildly. Since DevOps work at its core consists of complex, multi-step tasks, one mistake in the chain could cause a system-wide outage.
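
The compounding effect behind “one mistake in the chain” can be made concrete with a little arithmetic. This is a simplified sketch, not something from the original post: it assumes every step (for example deploy, monitor, check results, roll back) succeeds independently with the same probability, and the 95% per-step figure is purely hypothetical. Under that assumption, the chance that the whole chain succeeds is the per-step probability raised to the number of steps.

```python
def chain_success_probability(per_step: float, steps: int) -> float:
    """Probability that every step in a chain succeeds.

    Assumes (hypothetically) that steps are independent and share
    the same per-step success probability.
    """
    if not 0.0 <= per_step <= 1.0:
        raise ValueError("per_step must be between 0 and 1")
    if steps < 0:
        raise ValueError("steps must be non-negative")
    return per_step ** steps


if __name__ == "__main__":
    # Hypothetical agent that gets each individual step right 95% of the time
    for steps in (1, 4, 10, 20):
        print(steps, round(chain_success_probability(0.95, steps), 3))
```

With these made-up numbers, a four-step rollout completes cleanly only about 81% of the time, and longer chains fare worse. Real failures are rarely independent, so treat this as intuition rather than a model of any actual agent.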

I have been feeling similarly for some time now. I know some small and mid-size tech companies that are so insecure because of AI that they are overloading their teams and setting very high expectations for feature delivery and POC/MVP delivery. Suddenly, the engineering work that used to be fun, enjoyable and fulfilling (because you learn from experimenting, produce high-quality engineering work, and grow as an engineer) is becoming rarer inside these companies. They feel that “someone could easily vibe-code the product they built over the years in a few days.” So they expect developers to ship and iterate very fast, paying almost no attention to output quality. I personally see the value of AI in my workflow as a productivity booster for mundane, repetitive tasks that require very little cognitive effort from me. Another use case where I find it really helpful is exploring things I am not experienced with. However, these companies fail to see that it is hype. They don’t realize that the AI-generated bloat piling up in the codebase, driven by the sudden increase in expected velocity, will make their products very hard to maintain and scale in the future (even with AI). They believe every developer should now be able to be a 10x developer.

Arch is good, Omarchy makes it a little better.

I've been running Arch Linux since the start of this year and I've loved it. I previously had Fedora 32 and got frustrated because it kept breaking. Before that I was a SUSE guy (still love that distro), but that had issues too. I decided to try Arch and, oh wow, it has been the most stable Linux distro I have ever run, and there's probably an answer in the Arch Wiki for most problems. The only issue I had was getting Wayland and Hyprland working. Then I discovered Omarchy. It's essentially an install script, but I think with their latest release it's now also a full distro. It's the stability of Arch with Wayland + Hyprland working! I still recommend learning to do a proper Arch install, but if you have time or are just curious, take a look at Omarchy. One other thing: using Omarchy inspired me, so I'm currently learning how to write my own install and setup script.

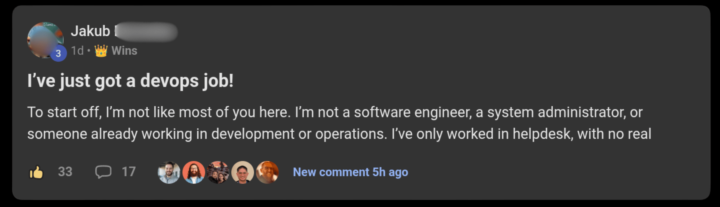

2 new DevOps offers last week (screenshots inside)

Two KubeCraft members just landed DevOps roles. Here’s what they did and what you can copy.

Jakub (Helpdesk → DevOps Engineer, 60 days after joining KubeCraft)
- Built Arch Linux from scratch (multiple times)
- Stood up a full Kubernetes homelab
- Earned Azure Administrator (AZ-104)
- Practiced 10+ hrs/day for 2 months
Result: Offer after 1 month of searching. Interviews felt easy because he’d already implemented the stack.

Get the KubeCraft Career Accelerator + mentorship → GO HERE

Milan (QA → DevOps/Platform via an internal move)
- Installed Arch the hard way; built a K8s homelab
- Earned LPIC-1, polished LinkedIn (500+ connections)
- Blogged on Substack/X; applied internally with warm relationships
Result: Offer signed. Internal move unlocked by skill + visibility.

The pattern:
- Ship real builds (Linux, K8s, Terraform, CI/CD, Git, Python)
- Show proof of work; raise belief and confidence
- Pick the right path (external vs internal) and go all-in

We opened 5 spots for a September start to mentor the next success stories. See if you’re a fit → APPLY NOW

P.S. Watching tech tutorials will not land you jobs. We teach you how to market yourself and how to handle interviews with confidence. If you’re sick of getting rejected, APPLY HERE to see if you qualify for our mentorship.

@mahmoud-elnaggar-9139

Web Engineer, looking to switch to DevOps Engineering

Joined Sep 4, 2025

ISTJ

Amsterdam