Memberships

AI Architects

2.7k members • Free

AI Automation Society

226.1k members • Free

The AI Agent

36 members • $37/m

5minAI

2.9k members • Free

8 contributions to 5minAI

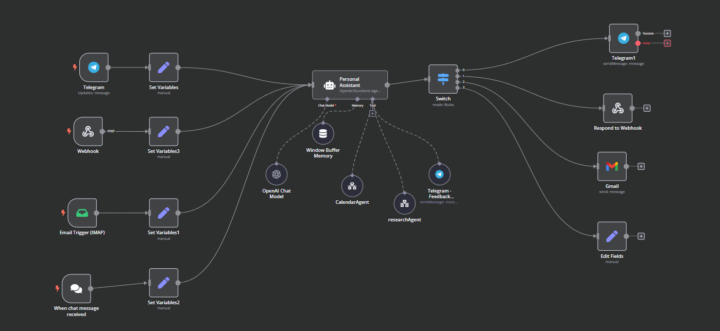

Multiple inputs, howto set variables?

Hi, this seems simple, but I haven't figured it out yet: I want to use the same flow for different inputs (Telegram, webhook, chat, mail). See the screenshot below for clarification (in the AI Agent I can only refer to one variables node). Please advise, thanks!

0 likes • Jan '25

I'll give it a go, but I would have about 4 inputs. I guess that wouldn't matter. Also, isn't it a problem that the merge happens with empty inputs from nodes that did not execute? Again, did you try it yourself? The last solution you said would work did not. I appreciate your input, but are you just guessing, or do you know it works?
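One way to think about the question in this thread is to map every trigger's payload onto a single shared shape before it reaches the AI Agent, so the agent only ever refers to one set of variables. Below is a minimal sketch of that idea in plain Python. The function name `normalize_input`, the output keys, and the webhook and mail field names are assumptions made for illustration; the actual field names depend on how each trigger is configured.

```python
from typing import Any


def normalize_input(source: str, payload: dict[str, Any]) -> dict[str, str]:
    """Map a trigger-specific payload onto one shared shape.

    All field names below are illustrative assumptions; check the real
    output of each trigger before relying on them.
    """
    if source == "telegram":
        # Telegram updates carry the text under message.text
        message = payload.get("message", {})
        text = message.get("text", "")
        session_id = str(message.get("chat", {}).get("id", ""))
    elif source == "webhook":
        # Assumed: the caller posts {"text": ..., "user": ...} in the body
        body = payload.get("body", {})
        text = body.get("text", "")
        session_id = str(body.get("user", ""))
    elif source == "chat":
        # Assumed field names for a chat trigger
        text = payload.get("chatInput", "")
        session_id = str(payload.get("sessionId", ""))
    elif source == "mail":
        # Assumed field names for an email trigger
        text = payload.get("text", "")
        session_id = str(payload.get("from", ""))
    else:
        raise ValueError(f"unknown source: {source}")

    # Every branch ends in the same three keys, so downstream steps
    # never need to know which trigger fired.
    return {"source": source, "text": text, "session_id": session_id}
```

Because only the branch for the trigger that actually fired is evaluated, inputs that did not execute never enter the picture, which sidesteps the worry about merging empty inputs.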

NUC for running open-source LLMs and Docker 24/7

**Hi everyone,**

I recently purchased the **GMKtec NUCBox K9** (specs below) with the goal of running **70B open-source LLMs** locally. For around **$1,200**, this mini PC packs a punch with its **56W power efficiency**, making it a great choice for a dedicated AI/LLM assistant.

My plan is to use it as a **24/7 personal assistant** for tasks like:

- **News aggregation** and summarization.
- **Vectorizing and updating** all my personal data into **Qdrant** for semantic search.
- Running **HyperV**, **Docker** (for n8n workflows, Ollama, Open-WebUI, and TTS models), and other AI tools.

One thing I didn’t fully realize is that the **70B model benchmarks** I saw weren’t tested with **Ollama** or **LM Studio**. However, there’s a **driver for the Intel GPU**, so I’m hopeful it’ll perform well. I’ll keep experimenting and see how it goes.

**Long-term goal:** I want to create a **"Her"-like AI assistant** that I can talk to (with **TTS** support) and has **infinite memory** to remember all our interactions and learn from them. This NUC is my first step toward building that dream setup.

**Questions for the community:**

- Does anyone have a **similar setup** or experience with running large models on mini PCs?
- Any tips or feedback on optimizing performance for this kind of workload?
- Curious to hear about your approaches and ideas!

Here’s the link to the NUC I bought: [GMKtec NUCBox K9](https://www.gmktec.com/products/intel-ultra-5-125h-mini-pc-nucbox-k9?srsltid=AfmBOoqh67RvSXhq8XTR6d7gv0Jh16x3OI8kzTyXwy7671PdEF5iLTXm&variant=2c517a3e-15dd-4cfc-a862-41dc0a7da684)

Looking forward to your thoughts and suggestions!

J
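For anyone sizing a similar box, a rough back-of-the-envelope estimate of how much memory a 70B model's weights need at different quantization levels may help. This is only a sketch: the helper name `weight_memory_gb` is made up for illustration, and the figure covers the weights alone, so the KV cache, runtime overhead, and the OS come on top of it.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate memory for the model weights alone, in GB (10^9 bytes)."""
    bytes_total = params_billion * 1e9 * bits_per_weight / 8
    return bytes_total / 1e9


# Compare common precisions for a 70B-parameter model.
for bits in (16, 8, 4):
    print(f"70B at {bits}-bit: ~{weight_memory_gb(70, bits):.0f} GB")
# prints:
# 70B at 16-bit: ~140 GB
# 70B at 8-bit: ~70 GB
# 70B at 4-bit: ~35 GB
```

Comparing these numbers with the RAM actually installed in the machine gives a quick first check of whether a given quantization can fit at all, before any benchmarking.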

AI agents 101 by Google

Google has released a new guide for anyone who wants to understand how AI agents work. The 42-page tutorial lays out all the background, theories and concepts in a nutshell. Literally everything you need to know:

- AI agents, components, and cognitive architectures.
- Tools: extensions, functions and data stores.
- Learning techniques to improve AI agent performance.
- Building AI agents on LangChain and LangGraph.

Link: https://www.kaggle.com/whitepaper-agents

n8n Globals

Has anybody used this yet? Apparently it gives open-source n8n the ability to use global variables. It's not very clear to me how to use it, but it seems to add value: umanamente/n8n-nodes-globals: N8N community node that allows users to create global constants and use them in all their workflows. I'd appreciate your feedback! J

Structured output in AI Agent?

Hi, this video shows how to use the structured output parser, along with some other tips: Master Multi-AI Agent Workflows in N8N | Unlock the Secrets to Advanced Automation. I would appreciate your view on it. I do have concerns about publishing output to WordPress, which needs a different format than LinkedIn or X. Is the output parser the way to go then? Thanks for your input! J
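To make the per-platform concern concrete, here is a minimal sketch of what "structured output" checking could look like outside of n8n: the model is asked for JSON, and each target platform has its own required fields. The field names, the `parse_post` helper, and the choice of which platforms need which fields are assumptions for illustration, not how the n8n output parser is implemented; the 280-character limit applies to standard posts on X.

```python
import json

# Assumed per-platform requirements, for illustration only.
REQUIRED_FIELDS = {
    "wordpress": {"title", "content"},
    "linkedin": {"text"},
    "x": {"text"},
}
MAX_TEXT_LENGTH = {"x": 280}  # standard post length limit on X


def parse_post(platform: str, raw: str) -> dict:
    """Parse the model's JSON output and check it against one platform."""
    data = json.loads(raw)
    missing = REQUIRED_FIELDS[platform] - set(data)
    if missing:
        raise ValueError(f"{platform}: missing fields {sorted(missing)}")
    limit = MAX_TEXT_LENGTH.get(platform)
    if limit is not None and len(data["text"]) > limit:
        raise ValueError(f"{platform}: text exceeds {limit} characters")
    return data


# Usage: the same agent output is rejected or accepted per platform.
post = parse_post("wordpress", '{"title": "Hello", "content": "<p>Body</p>"}')
print(post["title"])  # prints: Hello
```

The point of the sketch is that one schema per destination keeps a WordPress article from being validated like a short social post, which is the situation the question describes.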
