Owned by Guerin

Master AI use cases from legal & the supply chain to digital marketing & SEO. Agents, analysis, content creation--Burstiness & Perplexity from NovCog

Memberships

AI Local SEO & Lead Generation

997 members • Free

Vibe Coder

356 members • Free

AI Money Lab

51.6k members • Free

Turboware - Skunk.Tech

31 members • Free

Ai Automation Vault

15.5k members • Free

AI Automation Society

249.7k members • Free

CribOps

52 members • $39/m

AI Marketeers

214 members • $20/m

Skoolers

190.1k members • Free

59 contributions to Burstiness and Perplexity

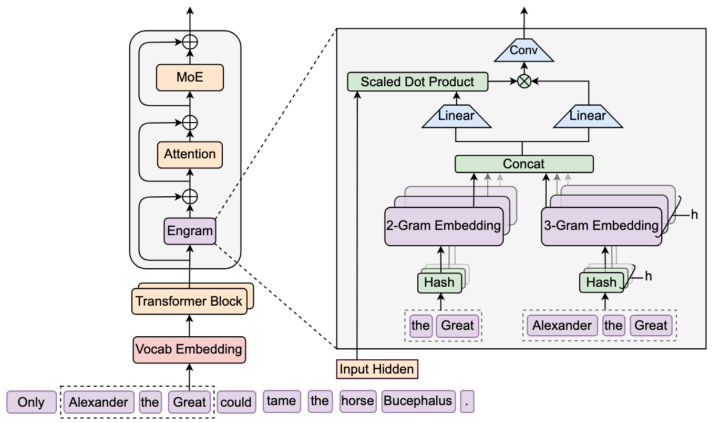

DeepSeek’s engram

I missed this one, with all my focus on context management tech and the whole stochasticity (a word I struggle to pronounce, much less type) of inference. But it is January, which means the folks at DeepSeek have made more key architecture improvements. I’ll be honest, it’s going to take me a couple of days to make sense of this, but my analysis will eventually be in the Burstiness and Perplexity Skool group (bleeding edge classroom). For now, I’ll just let them tell you (and why is their English so much better than that of so many native speakers?):

“While Mixture-of-Experts (MoE) scales capacity via conditional computation, Transformers lack a native primitive for knowledge lookup, forcing them to inefficiently simulate retrieval through computation. To address this, we introduce conditional memory as a complementary sparsity axis, instantiated via Engram, a module that modernizes classic 𝑁-gram embedding for O(1) lookup. By formulating the Sparsity Allocation problem, we uncover a U-shaped scaling law that optimizes the trade-off between neural computation (MoE) and static memory (Engram).”

#deepseek #engram #aiarchitecture #hiddenstatedriftmastermind
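To make the "N-gram embedding for O(1) lookup" phrase a little more concrete, here is a minimal sketch of a classic hashed N-gram embedding table. It is not DeepSeek's Engram module: the class name `NGramMemory`, the table size, the hash choice, and the random vectors are all assumptions made for illustration. The only point is that fetching a vector for an N-gram costs one hash and one array index, no matter how large the table is.

```python
import hashlib
import random


class NGramMemory:
    """Toy hashed N-gram embedding table (illustrative only, not Engram)."""

    def __init__(self, n=2, num_slots=1024, dim=4, seed=0):
        rng = random.Random(seed)
        self.n = n
        self.num_slots = num_slots
        # Static memory: one vector per hash slot (random here, learned in practice).
        self.table = [
            [rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(num_slots)
        ]

    def _slot(self, ngram):
        # Stable hash of the token-id tuple, reduced to a slot index.
        key = ",".join(str(t) for t in ngram).encode("utf-8")
        digest = hashlib.blake2b(key, digest_size=8).digest()
        return int.from_bytes(digest, "big") % self.num_slots

    def lookup(self, token_ids):
        """Return one vector for every position that has a full trailing N-gram."""
        vectors = []
        for i in range(self.n - 1, len(token_ids)):
            ngram = tuple(token_ids[i - self.n + 1 : i + 1])
            # One hash plus one index: constant time per position.
            vectors.append(self.table[self._slot(ngram)])
        return vectors


memory = NGramMemory()
vectors = memory.lookup([5, 7, 5, 7])  # bigrams: (5,7), (7,5), (5,7)
```

The repeated bigram `(5, 7)` maps to the same slot both times, which is the "static memory" behavior: the same local pattern always retrieves the same vector without any attention or feed-forward computation.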

Google’s Managed MCP and the Rise of Agent-First Infrastructure

Death of the Wrapper: Google has fundamentally altered the trajectory of AI application development with the release of managed Model Context Protocol (MCP) servers for Google Cloud Platform (GCP). By treating AI agents as first-class citizens of the cloud infrastructure, rather than external clients that need custom API wrappers, Google is betting that the future of software interaction is not human-to-API, but agent-to-endpoint.

1. The Technology: What Actually Launched?

Google’s release targets four key services, with a roadmap to cover the entire GCP catalog.

• BigQuery MCP: Allows agents to query datasets, understand schema, and generate SQL without hallucinating column names. It uses Google’s existing “Discovery” mechanisms but formats the output specifically for LLM context windows.
• Google Maps Platform: Agents can now perform “grounding” checks: verifying real-world addresses, calculating routes, or checking business hours as a validation step in a larger workflow.
• Compute Engine & GKE: Perhaps the most radical addition. Agents can now read cluster status, check pod logs, and potentially restart services. This paves the way for “Self-Healing Infrastructure” where an agent detects a 500 error and creates a replacement pod automatically.

The architecture utilizes a new StreamableHTTPConnectionParams method, allowing secure, stateless connections that don’t require a persistent WebSocket, fitting better with serverless enterprise architectures.

2. The Strategic Play: Why Now?

This announcement coincides with the launch of Gemini 3 and the formation of the Agentic AI Foundation. Google is executing a “pincer movement” on the market:

1. Top-Down: Releasing state-of-the-art models (Gemini 3).
2. Bottom-Up: Owning the standard (MCP) that all models use to talk to data.

By making GCP the “easiest place to run agents,” Google hopes to lure developers away from AWS and Azure. If your data lives in BigQuery, and BigQuery has a native “port” for your AI agent, moving that data to Amazon Redshift (which might require building a custom tool) becomes significantly less attractive.
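For readers who have not seen what "agent-to-endpoint" looks like on the wire, here is a rough sketch of the message an agent sends when it calls a tool on an MCP server. MCP is built on JSON-RPC 2.0 and tool invocations use the `tools/call` method. The tool name `execute_sql` and its `query` argument are hypothetical placeholders, not confirmed names from Google's BigQuery MCP server, and this sketch only builds the request body without sending it anywhere.

```python
import json
from itertools import count

# Monotonic request ids, as JSON-RPC expects each request to carry its own id.
_request_ids = count(1)


def make_tool_call(tool_name, arguments):
    """Build an MCP `tools/call` request as a plain JSON-RPC 2.0 dict."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


# Hypothetical tool name and argument; a real server advertises its own
# tool list in response to a `tools/list` request.
request = make_tool_call("execute_sql", {"query": "SELECT 1"})
body = json.dumps(request)
```

The agent never needs a hand-written wrapper around the service's REST API: it discovers tools, then posts small, uniform messages like this one.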

Evidence Map: LLM Technical Phenomena & Research Status

I've compiled an evidence map covering 8 critical LLM technical phenomena that affect content generation, SEO, and AI-driven optimization strategies. Here are the key research findings:

**8 Technical Phenomena Covered:**

1. **KV Cache Non-Determinism** - Batch invariance breaks cause same prompts to return different outputs at temperature=0. GPT-4 shows ~11.67 unique completions across 30 samples.
2. **Hidden State Drift & Context Rot** - Performance degrades 20-60% as input length increases. Middle content gets ignored (40-70% vs 60-85% for shuffled content).
3. **RLHF/Alignment Tax** - Alignment training drops NLP benchmark performance 5-15%. Healthcare, finance, and legal content get selectively suppressed.
4. **MoE Routing Non-Determinism** - Sparse MoE routing operates at batch-level; tokens from different requests interfere in expert buffers.
5. **Context Rot (Long-Context Failures)** - "Lost in the middle" phenomenon: mid-context ignored even on simple retrieval. NIAH benchmarks misleading vs real-world tasks.
6. **System Instructions & Prompt Injection** - No architectural separation between system prompts and user input. All production LLMs vulnerable.
7. **Per-Prompt Throttling** - Rate limiting (TPM not just RPM) indirectly reshapes batch composition, affecting output variance.
8. **Interpretability Gap** - Polysemantic neurons, discrete phase transitions, and opaque hallucination sources remain unexplained.

**10 Key Takeaways for SEO/AEO/GEO:**

• Non-determinism is structural, not a bug
• Long-context reliability is partial (20-60% degradation past 100k tokens)
• Middle content gets ignored; front-load critical info
• Distractors harm LLM citations 10-30%
• Alignment suppresses valid healthcare/finance/competitive content
• Reproducibility requires 2x+ slower inference
• Interpretability incomplete: we don't fully understand citation behavior
• 42% citation overlap between platforms (platform-specific optimization needed)
• RAG wins over parametric (2-3x more diverse citations)
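A figure like "~11.67 unique completions across 30 samples" is an average of a simple count, and it is easy to reproduce the metric on your own prompts. The sketch below assumes you have already collected repeated completions per prompt at temperature=0. The function names and the tiny sample data are made up for illustration and are not taken from the underlying research.

```python
from statistics import mean


def unique_completions(samples):
    """Count distinct completions for one prompt, ignoring edge whitespace."""
    return len({s.strip() for s in samples})


def mean_unique_completions(runs):
    """Average the distinct-completion count over all prompts.

    `runs` maps each prompt to the list of completions it returned.
    """
    return mean(unique_completions(samples) for samples in runs.values())


# Toy data, invented for this example: 3 samples per prompt.
runs = {
    "prompt A": ["red", "red ", "blue"],      # 2 distinct answers
    "prompt B": ["green", "green", "green"],  # 1 distinct answer
}
score = mean_unique_completions(runs)
```

A fully deterministic system scores exactly 1.0 on this metric, so anything above that is direct evidence of the structural non-determinism described in the first takeaway.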

Recursive Language Models: A Paradigm Shift

Recursive Language Models: A Paradigm Shift in Long-Context AI Reasoning

On December 31, 2025, researchers from MIT published a breakthrough paper introducing Recursive Language Models (RLMs), a novel architecture that fundamentally reimagines how large language models process extremely long contexts. Rather than expanding context windows (an approach that has proven expensive and prone to quality degradation), RLMs treat long prompts as external environments accessible through programmatic interfaces, enabling models to handle inputs up to 100 times larger than their native context windows while maintaining or improving accuracy at comparable costs. [arxiv +3]

This innovation arrives at a critical inflection point. The AI agents market is projected to explode from $7.84 billion in 2025 to $52.62 billion by 2030, a compound annual growth rate of 46.3%. Yet enterprises face a stark adoption paradox: while 95% of educated professionals use AI personally, most companies remain stuck in experimentation phases, with only 1-5% achieving scaled deployment. The primary bottleneck? Context engineering: the ability to supply AI systems with the right information at the right time without overwhelming model capacity or exploding costs. [brynpublishers +5]

RLMs directly address this infrastructure challenge, positioning themselves as what Prime Intellect calls “the paradigm of 2026” for long-horizon agentic tasks that current architectures cannot reliably handle. [primeintellect]

The Context Crisis: Why Traditional Approaches Are Failing

The Limits of Context Window Expansion

The AI industry has pursued a straightforward strategy for handling longer inputs: make context windows bigger. Context windows have grown approximately 30-fold annually, with frontier models now claiming capacity for millions of tokens. Gemini 2.5 Pro processes up to 3 hours of video content; GPT-5 supports 400,000-token windows. [epoch +2]

Yet this brute-force scaling encounters three fundamental problems:
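Stepping back to the core RLM idea described above, the sketch below shows the general shape of "prompt as external environment": the long input lives in an ordinary variable, and a model with a small window is only ever called on slices of it. This is a deliberately simplified illustration, not the paper's architecture. In the paper's framing the model itself decides how to inspect and partition the environment, whereas here the split is a hard-coded halving, and `toy_model` is a hypothetical stand-in for a real LLM call.

```python
def toy_model(keyword, records, window):
    """Stand-in for an LLM call: rejects oversized input, else scans it."""
    if len(records) > window:
        raise ValueError("input exceeds the model's context window")
    for record in records:
        if keyword in record:
            return record
    return None


def recursive_query(keyword, records, window=50):
    """Answer a lookup over `records` without ever exceeding `window` per call."""
    if len(records) <= window:
        return toy_model(keyword, records, window)
    # Too large for one call: split the environment and recurse on each half.
    mid = len(records) // 2
    left = recursive_query(keyword, records[:mid], window)
    if left is not None:
        return left
    return recursive_query(keyword, records[mid:], window)


# An input 100x larger than the toy model's window, with one buried fact.
records = [f"row {i}: filler" for i in range(5000)]
records[4321] = "row 4321: secret=42"
answer = recursive_query("secret", records, window=50)
```

No single call sees more than 50 records, yet the buried fact is still found, which is the intuition behind handling inputs far beyond the native window.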

Disrupt the Long-Context LLM

How Sakana AI's DroPE Method is About to Disrupt the Long-Context LLM Market

The Japanese AI research lab has discovered a way to extend context windows by removing components rather than adding them, challenging the "bigger is better" paradigm in AI development.

The $82 Billion Context Window Problem

The large language model market is projected to reach $82.1 billion by 2033, with long-context capabilities emerging as a key competitive differentiator. Enterprises are demanding models that can process entire codebases, lengthy legal contracts, and extended conversation histories. Yet there's a fundamental problem: extending context windows has traditionally required either prohibitively expensive retraining or accepting significant performance degradation. Most organizations assumed these were the only options, until now.

A Counterintuitive Breakthrough

Sakana AI, the Tokyo-based research company co-founded by "Attention Is All You Need" co-author Llion Jones, has published research that fundamentally challenges conventional wisdom. Their method, DroPE (Drop Positional Embeddings), demonstrates that the key to longer context isn't adding complexity, but strategically removing it. The insight is elegantly simple: positional embeddings like RoPE act as "training wheels" during model development, accelerating convergence and improving training efficiency. However, these same components become the primary barrier when extending context beyond training lengths.

The Business Case: 99.5% Cost Reduction

Here's what makes this revolutionary from a business perspective: Traditional long-context training for a 7B parameter model costs $20M+ and requires specialized infrastructure. DroPE achieves superior results with just 0.5% additional training compute, roughly $100K-$200K.

This 99.5% cost reduction democratizes long-context capabilities, enabling:

- Startups to compete with well-funded labs
- Enterprises to extend proprietary models without massive investment
- Research institutions to explore long-context applications previously out of reach
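As a quick sanity check on the headline number, the arithmetic can be worked through using only the figures quoted in the post ($20M baseline, $100K-$200K for DroPE). Those dollar amounts are the post's own estimates and are taken at face value here; the function name is just a convenience for this example.

```python
def cost_reduction(baseline, new_cost):
    """Fraction of the baseline cost that is saved."""
    return 1.0 - new_cost / baseline


baseline = 20_000_000  # "$20M+" traditional long-context training, per the post

for cost in (100_000, 200_000):  # the post's "$100K-$200K" range
    print(f"${cost:,} -> {cost_reduction(baseline, cost):.1%} reduction")
```

This prints a 99.5% reduction for the $100K case and 99.0% for the $200K case, so the 99.5% headline corresponds to the low end of the quoted cost range (0.5% of $20M is exactly $100K).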

@guerin-green-9848

Novel Cognition, Burstiness and Perplexity. Former print newspaperman, public opinion & market research and general arbiter of trouble, great & small.

Active 1d ago

Joined Jan 20, 2025

Colorado
