Owned by Dariusz

Memberships

AI with Apex - Max aka Mosheh

85 members • Free

WotAI

467 members • Free

Strefa Akademii Automatyzacji

519 members • Free

Flow State

1k members • Free

AI Automation Circle

5.1k members • Free

Scrapes.ai - Foundations

3.8k members • Free

AI Automation Builders

152 members • Free

Early AI-dopters

772 members • $59/month

AI Architects Pro

26 members • $1,000

3 contributions to AI Marketing

Kimi K2 Quickstart guide

Kimi is great for tons of marketing tasks...

Kimi K2 QuickStart

How to get the most out of models like Kimi K2.

Kimi K2 is a state-of-the-art mixture-of-experts (MoE) language model developed by Moonshot AI. It has 1 trillion total parameters (32B activated) and is currently the best non-reasoning open-source model out there. It was trained on 15.5 trillion tokens, supports a 128k context window, and excels at agentic tasks, coding, reasoning, and tool use. Even though it's a 1T model, only 32B parameters are active at inference time, which gives it near-frontier quality at a fraction of the compute of dense peers.

In this quick guide, we'll go over the main use cases for Kimi K2, how to get started with it, when to use it, and prompting tips for getting the most out of this incredible model.

How to use Kimi K2

Get started with this model in 10 lines of code! The model ID is moonshotai/Kimi-K2-Instruct, and the pricing is $1 per 1M input tokens and $3 per 1M output tokens.

```python
from together import Together

# The client reads the API key from the TOGETHER_API_KEY environment variable.
client = Together()

resp = client.chat.completions.create(
    model="moonshotai/Kimi-K2-Instruct",
    messages=[{"role": "user", "content": "Code a hacker news clone"}],
    stream=True,
)

# Print the streamed text as it arrives; skip chunks that carry no text.
for tok in resp:
    if tok.choices and tok.choices[0].delta.content:
        print(tok.choices[0].delta.content, end="", flush=True)
```

Use cases

Kimi K2 shines in scenarios requiring autonomous problem-solving, specifically coding and tool use:

- Agentic Workflows: Automate multi-step tasks like booking flights, research, or data analysis using tools/APIs
- Coding & Debugging: Solve software engineering tasks (e.g., SWE-bench), generate patches, or debug code
- Research & Report Generation: Summarize technical documents, analyze trends, or draft reports using long-context capabilities
- STEM Problem-Solving: Tackle advanced math (AIME, MATH), logic puzzles (ZebraLogic), or scientific reasoning
- Tool Integration: Build AI agents that interact with APIs (e.g., weather data, databases)

Prompting tips

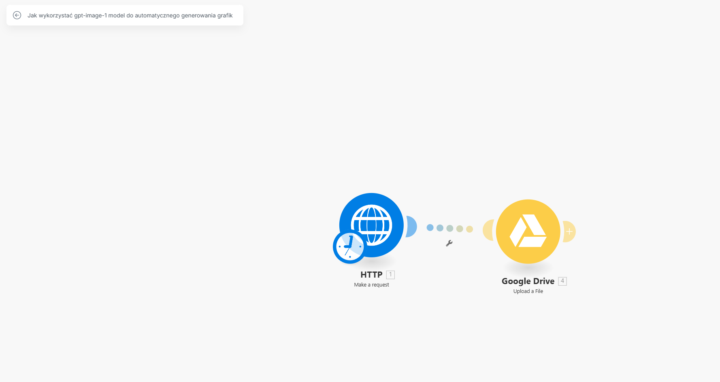
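To put the Kimi K2 numbers quoted above in perspective, here is a rough sketch that estimates the cost of a request and the share of parameters active per token. It assumes the quoted $1 and $3 prices are per 1M tokens; the function names are hypothetical and only for illustration.

```python
# Assumption: the quoted prices are USD per 1M tokens.
INPUT_PRICE_PER_M = 1.00
OUTPUT_PRICE_PER_M = 3.00

# Parameter counts quoted in the guide: 1T total, 32B active per token.
TOTAL_PARAMS = 1_000_000_000_000
ACTIVE_PARAMS = 32_000_000_000


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Return the estimated request cost in USD (hypothetical helper)."""
    total = input_tokens * INPUT_PRICE_PER_M + output_tokens * OUTPUT_PRICE_PER_M
    return total / 1_000_000


def active_fraction() -> float:
    """Return the share of parameters used for each token."""
    return ACTIVE_PARAMS / TOTAL_PARAMS


if __name__ == "__main__":
    print(f"200k in / 50k out: ${estimate_cost(200_000, 50_000):.2f}")
    print(f"Active parameters per token: {active_fraction():.1%}")
```

Check the provider's current pricing page before relying on these figures.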

How to use the gpt-image-1 model to automatically generate graphics

Hi. If anyone wants to use the new OpenAI image generation model in make.com, here's how. I hope it will be useful. 🙂 Of course, you need to supply your own API key, the prompt, and the resolution (the only available values are 1024x1024, 1536x1024 (landscape), 1024x1536 (portrait), or auto).
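The make.com scenario itself is not reproduced here, so as a rough sketch of what such an HTTP step would send, the snippet below builds the request for OpenAI's image generation endpoint. The helper name `build_image_request` and the returned dictionary layout are hypothetical; the code only assembles and validates the request and does not send it.

```python
import json

# Resolutions listed in the post for gpt-image-1.
ALLOWED_SIZES = {"1024x1024", "1536x1024", "1024x1536", "auto"}
API_URL = "https://api.openai.com/v1/images/generations"


def build_image_request(api_key: str, prompt: str, size: str = "auto") -> dict:
    """Assemble URL, headers, and JSON body for an image request (hypothetical helper)."""
    if size not in ALLOWED_SIZES:
        raise ValueError(f"Unsupported size {size!r}; use one of {sorted(ALLOWED_SIZES)}")
    if not prompt.strip():
        raise ValueError("Prompt must not be empty")
    return {
        "method": "POST",
        "url": API_URL,
        "headers": {
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        "body": json.dumps({"model": "gpt-image-1", "prompt": prompt, "size": size}),
    }


if __name__ == "__main__":
    # "YOUR_API_KEY" is a placeholder; put your own key here.
    request = build_image_request("YOUR_API_KEY", "A minimalist banner for an AI newsletter", "1536x1024")
    print(request["url"])
    print(request["body"])
```

The same URL, headers, and body can be entered into a generic HTTP request module; the response should carry the generated image as base64 data that a later step has to decode.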


Manus access codes

Hi. If anybody is interested, I have an access code to share.
