Owned by Dan

Where Healthcare Leaders Master AI Implementation

Memberships

Agentic Labs

628 members • $7/m

WotAI

653 members • Free

Luke's AI Skool

2.2k members • Free

Tech Snack University

16.6k members • Free

Dopamine Digital

5.2k members • Free

Skoolers

190.1k members • Free

AI Agent Developer Academy

2.5k members • Free

Ai Automation Vault

15.5k members • Free

AI Money Lab

52.1k members • Free

14 contributions to Healthcare AI Tools

Build Club is now Healthcare AI Tools — Here's Why

We're renaming this community.

Build Club was broad. Too broad. It could have been about anything: software, woodworking, side projects. That's not what we're actually doing here. What we're doing is helping healthcare leaders figure out how to implement AI without wasting six months on pilots that go nowhere. So the new name reflects that: Healthcare AI Tools.

What's changing:
- Name and branding
- Tighter focus on healthcare-specific AI implementation
- More structured content around tools that actually work in clinical and operational settings

What's NOT changing:
- The mission: practical AI guidance, no hype
- The community: you're still here, still welcome
- The approach: vendor-neutral, experience-based, implementation-focused

Why this matters: Most AI communities are run by tech people who've never set foot in a hospital. They don't understand the workflows, the change management challenges, or why that "amazing" tool demo falls apart in real-world healthcare. This community exists because I've spent my career leading healthcare organizations through transformations, and now through AI integration. That's the lens everything here passes through.

What's coming:
- Weekly AI tool breakdowns (what works, what doesn't, and why)
- Implementation playbooks you can actually use
- Case studies from real healthcare organizations

If you're a healthcare executive, clinical leader, or health tech professional trying to make sense of AI, you're in the right place. Welcome to Healthcare AI Tools.

Questions? Drop them below.

Dan


Using Notion Agent to Organize Your Life

Just published: I Hired a $24/Month Employee Who Runs My Entire Business

Tuesday afternoon. 47 unread emails. Three client meetings. Notes scattered everywhere. Asked my assistant to organize it. 14 minutes later: Filed, linked, summarized.

The assistant? Notion AI agent. Cost: $24/month.

The insight: Everyone's focused on AI creating more content. The real problem? Organizing all the content AI helps you create. You're generating 10x faster. Capturing every meeting. Researching in minutes. But where does it all go?

What I cover in the article:
- Why "second brain" systems fail (and what finally worked)
- The actual bottleneck killing executive productivity in 2025
- How AI agents changed the game from creation to curation
- The "$24 question" that reframes how you think about AI pricing
- My complete system for managing clients, projects, and content

The bottom line: I've evaluated thousands of AI tools as a healthcare AI consultant. This is the best integration of AI into an existing platform I've seen. Not because it's powerful. Because it's indispensable.

Read the full breakdown: https://blog.rockettools.io/i-hired-a-24-month-employee-who-runs-my-entire-business/

Have you solved the organization problem yet? What's working for you?


Google just dropped a game-changer: Context URLs

I spent the last 3 hours building something in n8n that would've taken me 3 days to code from scratch. No web scraping. No API limits. No blocked requests.

Here's what happened: Google quietly released Context URLs - a way to pull structured content from ANY website without traditional scraping. Think of it as giving AI agents x-ray vision for web content.

In my n8n demo, I'm pulling real-time data from sites that normally block automated requests. The Context URL feature treats it like a human browser session, but returns structured JSON that my AI agents can actually understand.

The workflow I built:
- Input any website URL
- Google's Context API extracts the meaningful content
- n8n processes it through my custom AI agent
- Output: Clean, structured data ready for analysis

What used to require complex proxy rotations and headless browsers now takes 3 nodes in n8n. Success rate? Not 100%, but it still works great! No rate limits hit. No captchas triggered. No IP blocks.

The real power isn't just avoiding scraping headaches - it's that Context URLs understand the semantic structure of pages. For anyone building automation workflows, this changes everything. You can now build reliable data pipelines from sites that were previously off-limits.

Who else has tried Context URLs yet? What's your biggest challenge with web data extraction that this might solve?
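For anyone who wants to see the shape of that workflow outside n8n, here is a minimal Python sketch of the same four steps (URL in, extraction, agent step, structured record out). The post doesn't show the actual request, so the extraction call is left as a hypothetical `extract` callable. `PageRecord`, `run_pipeline`, `fake_extract`, and `first_sentence` are illustrative names, and the stubs exist only so the sketch runs offline.

```python
from dataclasses import dataclass
from typing import Callable
from urllib.parse import urlparse


@dataclass
class PageRecord:
    """Structured output handed to downstream analysis."""
    url: str
    title: str
    summary: str


def run_pipeline(
    url: str,
    extract: Callable[[str], dict],
    summarize: Callable[[str], str],
) -> PageRecord:
    """Input URL -> extraction step -> agent step -> structured record."""
    parsed = urlparse(url)
    if parsed.scheme not in ("http", "https") or not parsed.netloc:
        raise ValueError(f"not a fetchable URL: {url!r}")

    # Hypothetical stand-in for the extraction node; assumed to return
    # a dict with "title" and "text" keys.
    page = extract(url)
    text = page.get("text", "").strip()
    if not text:
        # Extraction will not succeed every time, so fail loudly rather
        # than pass an empty document to the agent step.
        raise RuntimeError(f"no content extracted from {url}")

    return PageRecord(url=url, title=page.get("title", ""), summary=summarize(text))


# Offline stubs; replace with real calls in practice.
def fake_extract(url: str) -> dict:
    return {"title": "Example page", "text": "First sentence. Second sentence."}


def first_sentence(text: str) -> str:
    return text.split(". ")[0].rstrip(".") + "."


record = run_pipeline("https://example.com/page", fake_extract, first_sentence)
print(record)
```

Passing the extraction and agent steps in as callables keeps the pipeline testable without network access, and makes the "not 100%" case an explicit error instead of a silent empty result.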


🚀 n8n Just Changed the Game with Data Tables

Quick heads up on something that's about to save you hours (and probably thousands in infrastructure costs). n8n just released Data Tables, and I've spent the last 24 hours going deep on this.

What this means for us: You can now store and manage data directly inside your n8n workflows. No external database needed. No SQL knowledge required. No separate infrastructure to maintain.

Real build I just tested: Created a complete lead scoring system that:
- Captures form submissions
- Stores contact data in n8n's native table
- Automatically enriches with API data
- Scores leads based on behavior

Time to build: 22 minutes. Cost: $0 extra.

Technical details that excited me:
- Full CRUD operations (Create, Read, Update, Delete)
- REST API access to your tables
- Real-time syncing
- Export to any format
- Query from any node in your workflow

For the solopreneurs here: Test your MVP ideas without technical debt. I watched a founder yesterday replicate what would normally be a $5k/month database setup in about an hour.

Challenge for the community: What's one manual process in your workflow that you could eliminate if your data lived inside your automations? Drop your use case below.

Who's already playing with this? Share your experiments!
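The lead scoring flow described above (capture, store, enrich, score) can be sketched in plain Python to make the logic concrete. This is not n8n's Data Tables API: `LeadTable` is an in-memory stand-in for a table keyed by email, and the event names and point values in `EVENT_POINTS` are made-up assumptions to tune for your own funnel.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Lead:
    email: str
    company: str = ""
    events: list = field(default_factory=list)
    score: int = 0


# Hypothetical weights, not taken from the original build.
EVENT_POINTS = {"form_submit": 10, "pricing_view": 20, "demo_request": 40}


class LeadTable:
    """In-memory stand-in for a data table, with basic CRUD."""

    def __init__(self) -> None:
        self._rows = {}

    def create(self, email: str) -> Lead:
        # setdefault keeps the existing row on repeat submissions.
        return self._rows.setdefault(email, Lead(email=email))

    def read(self, email: str) -> Optional[Lead]:
        return self._rows.get(email)

    def update(self, email: str, **changes) -> Lead:
        lead = self._rows[email]
        for name, value in changes.items():
            setattr(lead, name, value)
        return lead

    def delete(self, email: str) -> None:
        self._rows.pop(email, None)


def score(lead: Lead) -> int:
    # Unknown events contribute 0 points.
    behavior = sum(EVENT_POINTS.get(event, 0) for event in lead.events)
    enriched_bonus = 5 if lead.company else 0
    return behavior + enriched_bonus


table = LeadTable()
table.create("ana@example.com")                             # form submission
table.update("ana@example.com", company="Example Health")   # enrichment step
lead = table.read("ana@example.com")
lead.events += ["form_submit", "pricing_view"]              # tracked behavior
table.update("ana@example.com", score=score(lead))
print(table.read("ana@example.com").score)  # 35
```

Keeping the scoring rule in one small function means the weights can change without touching how rows are stored.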


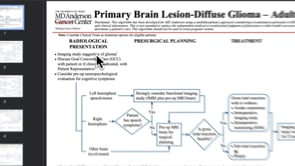

Creating a Searchable Database with Exact Citations

I just built something that completely changes how we access clinical knowledge. You know that frustration when you need exact language from a clinical guideline, but you're digging through 200-page PDFs?

I connected an AI agent in n8n directly to a Pinecone vector database containing uploaded clinical algorithms. Now I get precise citations and exact language from any document in seconds, not hours.

The best part? I recorded the entire build process from scratch. In 18 minutes, you'll see exactly how to:
- Set up the n8n workflow with Pinecone integration
- Configure the vector store for clinical documents
- Build the agent that delivers exact citations
- Test it with real clinical algorithms

This isn't theory. I've been using this system daily at RocketTools for client work, and it's cut my research time by 80%. The precision is incredible - it doesn't just give you "related content." It gives you the exact paragraph, page number, and context you need for compliance documentation.

Watch the full build here: https://vimeo.com/1120642938/d18fc27121?share=copy

Who else is building AI agents for healthcare workflows? What's your biggest challenge with clinical documentation?
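The key to getting "exact paragraph plus page number" back is storing each chunk with its source metadata, so that a search hit carries its own citation. Below is a toy Python sketch of that idea. It uses word-count vectors and cosine similarity in memory as a stand-in for a real embedding model and for Pinecone. `Chunk`, `embed`, `search`, and `cite` are illustrative names, and the sample text is invented, not taken from any real guideline.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass


@dataclass
class Chunk:
    document: str
    page: int
    text: str


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (a real build would use a model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def search(chunks: list, query: str, top_k: int = 1) -> list:
    """Return the top_k chunks most similar to the query."""
    q = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(embed(c.text), q), reverse=True)
    return ranked[:top_k]


def cite(chunk: Chunk) -> str:
    # The stored text is quoted verbatim, never paraphrased.
    return f'"{chunk.text}" ({chunk.document}, p. {chunk.page})'


# Invented sample chunks for illustration only.
chunks = [
    Chunk("sample-guideline.pdf", 12, "Reassess pain score within 30 minutes of intervention."),
    Chunk("sample-guideline.pdf", 47, "Document allergies before ordering contrast imaging."),
]

best = search(chunks, "when to reassess pain score")[0]
print(cite(best))
```

Because the citation is built from stored metadata rather than generated text, the quoted passage and page number are only as accurate as the chunking and ingestion step that recorded them.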



@dan-mccoy-9435

Storytelling consultant for 30 years now building AI and Chatbots

Active 8d ago

Joined Jul 25, 2025