Owned by Amirthan

Currently: Free. A vibecoding community to launch AI apps—no matter your background. Build, learn, and ship with the latest tools and real support.

Straight path to land AI Engineer job

Memberships

Evergreen Challenge Cohort

26 members • Paid

Skoolers

174.7k members • Free

4 contributions to Cursor Skool

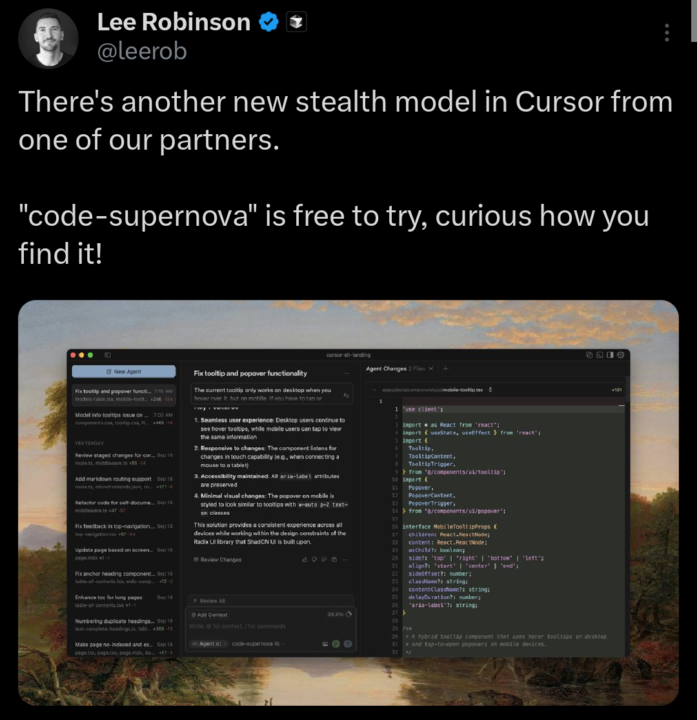

code-supernova stealth model in Cursor

There's a new stealth model in Cursor called "code-supernova", and it is free to try! People are speculating that it is a new model from Anthropic, maybe Sonnet 4.1. Give it a spin and let me know how you like it.

Documentation changes now that we have Cursor and LLMs

"Just ask grok-code-fast-1"

Quick thought that came to mind: I'm setting up deployment for an application and needed to define environment variables for it. I had no idea what environment variables the project had or what I should set. It's a new project, so I couldn't even look it up in the documentation.

So I just asked grok-code-fast-1 to go through the code and list all the environment variables that are used in the codebase. A couple of seconds later I had the list.

My first instinct was "I should document these somewhere". But that got me thinking: why? Why can't I just do the same thing next time I need to know the environment variables? Documentation gets out of date, while the code is always correct. So if possible, I feel like I should prefer asking documentation-related questions directly from AI, especially now that we have a blazing-fast, ridiculously cheap model to comb through the code.

Which means that in the future, documentation should focus way more on the things that can't be figured out just by looking at the code: design decisions, tradeoffs, reasoning, specifications, and so on. This kind of documentation also helps the model when developing software.

Side question that I have here: is there a way for Cursor to do the same without context pollution? Could Cursor Agent somehow ask Grok to go through the code, look for the environment variables, and just return a summary, so that the main Agent doesn't have to read all the code?

What do you think? Give me your thoughts in the comments.
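For comparison with the "ask the model to list the environment variables" approach, here is a minimal non-AI sketch of the same lookup. It is not what grok-code-fast-1 or Cursor does internally. The function names, the file suffixes, and the regex patterns are all assumptions for illustration, and they only cover a few common Python and Node.js idioms, so a model reading the code will likely catch cases this misses.

```python
import re
from pathlib import Path

# Illustrative patterns (an assumption, not an exhaustive list):
# they match a few common ways of reading environment variables.
ENV_PATTERNS = [
    re.compile(r"""os\.environ\[\s*['"]([A-Za-z_][A-Za-z0-9_]*)['"]\s*\]"""),
    re.compile(r"""os\.environ\.get\(\s*['"]([A-Za-z_][A-Za-z0-9_]*)['"]"""),
    re.compile(r"""os\.getenv\(\s*['"]([A-Za-z_][A-Za-z0-9_]*)['"]"""),
    re.compile(r"""process\.env\.([A-Za-z_][A-Za-z0-9_]*)"""),
]


def find_env_vars_in_text(text):
    """Return the set of environment variable names referenced in text."""
    names = set()
    for pattern in ENV_PATTERNS:
        names.update(pattern.findall(text))
    return names


def find_env_vars(root, suffixes=(".py", ".js", ".ts")):
    """Scan source files under root and return a sorted list of names."""
    names = set()
    for path in Path(root).rglob("*"):
        if path.is_file() and path.suffix in suffixes:
            text = path.read_text(encoding="utf-8", errors="ignore")
            names |= find_env_vars_in_text(text)
    return sorted(names)


if __name__ == "__main__":
    # Scan the current directory (hypothetical project root).
    for name in find_env_vars("."):
        print(name)
```

A script like this only returns a short list of names, which is roughly the kind of summary the side question above is after: the full source never has to enter the main conversation.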

1 like • 20d

Yeah, I also don't read documentation anymore. Asking AI directly is the best option. But at work I had to create documentation for other teams, so I implemented a system that prompts the agent to automatically update the documentation based on changes. You can also trigger the prompt manually to make it update the documentation now and then. The same can be done in Cursor with rules, if someone prefers old-school documentation. But my preferred style is the same as you mentioned. I don't have the patience to read :D. The only caveat here is that we will use up AI message quotas.

Grok Code Out Now (+ Free Week!)

A new Grok model, grok-code-fast-1, is now available in Cursor! It is fast and has competitive pricing ($0.2/1M input tokens, $1.5/1M output tokens). It is free for one week. Feel free to try it out!
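To get a feel for what those per-token prices mean, here is a rough cost sketch. The two prices come from the post above. The function name and the token counts in the example are hypothetical, and real billing may differ (for example, cached input or plan-specific pricing).

```python
# Prices quoted in the post, in USD per one million tokens.
INPUT_PRICE_PER_MILLION = 0.2
OUTPUT_PRICE_PER_MILLION = 1.5


def estimate_cost(input_tokens, output_tokens):
    """Estimate the USD cost of a request from its token counts."""
    input_cost = (input_tokens / 1_000_000) * INPUT_PRICE_PER_MILLION
    output_cost = (output_tokens / 1_000_000) * OUTPUT_PRICE_PER_MILLION
    return input_cost + output_cost


if __name__ == "__main__":
    # Hypothetical session: the model reads 2M tokens of code
    # and writes 100k tokens of answers.
    cost = estimate_cost(2_000_000, 100_000)
    print(f"${cost:.2f}")  # prints "$0.55"
```

Under these assumed numbers, reading two million tokens of code costs about 40 cents, with the output adding another 15 cents.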

Thoughts on Cursor Pricing updates

When we first started, Cursor pricing was pretty simple: 500 fast requests per month + unlimited slow requests. 1 chat message was 1 request. Simple and straightforward.

This means that you could potentially spend hundreds or thousands of dollars' worth of AI usage and only pay $20 for it. It looks to me like Cursor has been burning investor money and giving us free tokens at its own loss, which made the value of Cursor incredible.

Now it looks like that is changing. Cursor has changed the pricing model 3 or 4 times (can't keep count) during the last few months, and at least now it seems we are converging on "API costs + markup". Basically, if you want more, pay more.

The bad news here is that we probably need to pay more. The good news (as I see it):

- With this model, Cursor might actually be sustainable and stick around for the long haul, not just burn through investor money and go bankrupt.
- OpenAI has lowered their pricing a lot, so for example with GPT-5 we should still get a lot of code written with just the $20 (or more if you are on the higher plans).
- This means better incentives for Cursor: now they can just make the best AI IDE without having to figure out cheap tricks to get their own costs down (chunking, limiting the context, etc.).

@amirthan-puvaneswaran-7452

I run a community showing you how to vibecode AI apps from ideation to launch.

Active 2h ago

Joined Aug 12, 2025

Finland