5d (edited) • General discussion

Building trust and confidence in AI

AI doesn’t have to be a mysterious black box you’re forced to trust. Used well, it’s a tool bounded by clear rules, guardrails, and human oversight. When you understand how an AI system works, where its limits are, and how it’s monitored, fear decreases and confidence increases.

Good AI Use

Responsible AI use starts with strong safety boundaries. This means knowing what data should never be shared, which decisions AI should not make alone, and when human review is required.

Regular monitoring and evaluation of AI outputs help catch mistakes early and reduce harm, instead of discovering problems only after something goes wrong.
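To make the safety boundaries above a bit more concrete, here is a minimal Python sketch. It is only an illustration, not a complete safeguard: the patterns in `SENSITIVE_PATTERNS`, the categories in `HIGH_STAKES`, and the 0.9 confidence threshold are all made-up assumptions you would replace with your own policy.

```python
import re

# Hypothetical patterns for data that should never be shared with an AI tool.
SENSITIVE_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}

# Hypothetical decision types that AI should not make alone.
HIGH_STAKES = {"hiring", "medical", "credit"}


def redact(text: str) -> str:
    """Replace anything matching a sensitive pattern before the text is shared."""
    for label, pattern in SENSITIVE_PATTERNS.items():
        text = pattern.sub(f"[{label.upper()} REMOVED]", text)
    return text


def needs_human_review(decision_type: str, confidence: float,
                       threshold: float = 0.9) -> bool:
    """Send high-stakes or low-confidence outputs to a person."""
    return decision_type in HIGH_STAKES or confidence < threshold


if __name__ == "__main__":
    print(redact("Contact jane@example.com, SSN 123-45-6789"))
    print(needs_human_review("hiring", 0.99))
```

Simple pattern matching like this will miss plenty of sensitive data, so treat it as a first filter that sits alongside human judgment, not a replacement for it.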

AI and Ethics

Ethical AI begins with asking, “Who could be harmed or excluded by this result?” It also requires actively testing for bias in both the data and the decisions AI produces.
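One simple way to start testing decisions for bias is to compare outcome rates across groups. The sketch below is a rough illustration under assumptions: the `(group, approved)` record format, the group labels, and the sample data are invented for the example, and a single ratio is only one of many possible fairness checks.

```python
from collections import defaultdict


def selection_rates(records):
    """records: iterable of (group, approved) pairs; returns approval rate per group."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in records:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}


def disparity_ratio(rates):
    """Lowest rate divided by highest rate; 1.0 means all groups are treated alike."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0


if __name__ == "__main__":
    # Invented sample decisions, for illustration only.
    sample = [("A", True), ("A", True), ("A", True), ("A", False),
              ("B", True), ("B", False), ("B", False), ("B", False)]
    rates = selection_rates(sample)
    print(rates, disparity_ratio(rates))
```

A low ratio doesn’t prove unfair treatment by itself, but it is a signal that the data and the decisions deserve a closer human look.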

Transparency builds trust. Being honest about when AI is used, what it can and can’t do, and how decisions are made is far more effective than pretending AI is always correct.

Reliability in AI

Reliable AI isn’t magical. It’s consistent, well-tested, built on good data, and used for clear purposes with human oversight when the stakes are high.

Think of AI as a trained assistant. You test it, provide feedback, refine your prompts and workflows, and continually improve the system instead of handing it full control.

0

0 comments

powered by

skool.com/the-pioneer-lounge-1265

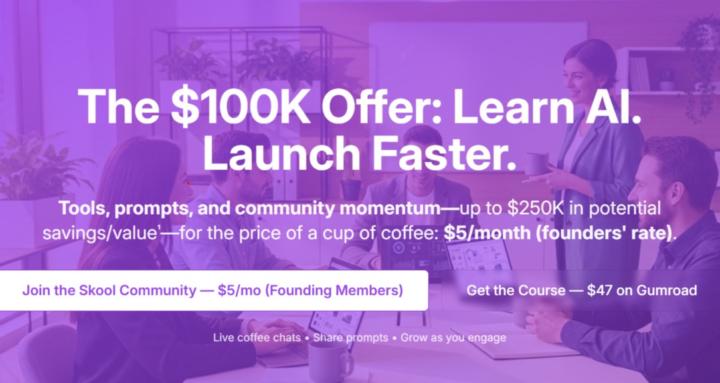

Get access to AI tools, prompts, templates, recordings, and group coaching.

Suggested communities

Powered by