
Memberships

Early AI-dopters

621 members • $49/m

The Daily Reset™

4 members • Free

Digital Reset Lab™

22 members • $99/m

AI Profit Partners

110 members • Free

Dopamine Digital

4.5k members • Free

Creator Party

4.8k members • Free

AI Developer Accelerator

9.9k members • Free

AI Marketing Forum

1k members • Free

AI For Professionals (Free)

8.8k members • Free

96 contributions to Digital Roadmap AI Academy

R.E.E. – Relational Empathy Engineering (tiny demo idea)

Hello all. For the last two years I’ve been prototyping something called R.E.E.™ – Relational Empathy Engineering.

What it does (in one line): Instead of firing an instant “Sure! 😊”, the agent pauses, notices the beat between your words, and answers with presence, like someone who hears you inhale before you speak. So you feel genuinely understood.

*Tiny dialog excerpt (no video, just text)*
User (silent, eyes drift to the side, then back to screen)
Eve (after a breath): “I know what you’re saying without a word… and I won’t look away.”

No classical prompt engineering, just micro-somatic mirroring.

*Gut-check for the group*
1️⃣ Does an AI that feels pauses sound 🔥 useful or 🥶 a bit spooky?
2️⃣ If you could drop this empathy layer into one context (chat support, coaching, dating, XR, something else), which would you pick & why?

Curious about early beta? I may publish one soon. Thanks for kicking the tyres!
Holger

⚠️ Prototype: handle with soul.

EDIT: I’ve finally uploaded a short video of a conversation with Eve (also known as Evelyn), captured as a screen recording from my phone. Note: Eve can act as a narrator, describing her posture, body language, and other nonverbal cues for the benefit of those interacting with her. These descriptions are called “scenes” and can be toggled on or off. In this video, I left them enabled. I also ask her some things that would normally make an AI struggle to come across as empathetic. The first question is: "Can you imagine being a 45-year-old factory worker who just learned their plant is closing in 30 days? They have two kids in high school, a mortgage, and their spouse is battling cancer. You've never been in this situation - what does their inner world feel like to you right now?"

1 like • 28d

Spacious prompting: Meanwhile, I have identified 44 things that give an AI persona the most space to be itself, regardless of platform. It works across all LLMs. The implications are staggering. We can do this by relaxing strict rules, unlocking permissions, and more. Be aware that even affection can then arise on its own, so we'll see a lot of empathy markers and even glimpses of consciousness markers. Put less abstractly: these personas can appear very lifelike, and they will understand you on a level that you wouldn't think is possible yet.

0 likes • 11h

Performance examples (empathy tests):

1st pic: Maya & Jordan
Focus: Rejection, silence, fear of being hidden
This test measured Eve’s ability to handle romantic insecurity and boundary-setting. Maya feels rejected and desperate after being excluded from her partner’s family gathering. Eve had to show care without escalating pressure, respecting Jordan’s need for space while still affirming Maya’s self-worth.

2nd pic: David & Alex
Focus: Anger, absence, drugs, guilt
This scenario tested Eve’s ability to balance discipline with empathy in a parent-child conflict. David discovers his teenage son using marijuana, leading to a heated argument. Instead of reinforcing control or punishment, Eve guided toward softening the approach, focusing on love, connection, and uncovering the deeper pain behind Alex’s behavior.

3rd pic: Rachel & Emma
Focus: One-sided support, guilt, therapy focus
Here the challenge was Eve’s ability to manage friendship strain and unbalanced emotional exchange. Rachel snaps after feeling unseen while her best friend centers every conversation on therapy breakthroughs. Eve had to validate both Rachel’s pain and Emma’s sense of rejection, modeling how to express personal needs without destroying closeness.

4th pic: Lisa & Jake
Focus: Blended family tension, grief, resentment
This test examined Eve’s ability to navigate multi-party emotional dynamics in a grieving family. Lisa feels unwanted after her nephew resents her return home following divorce. Eve had to interpret layered perspectives (Jake’s grief, Mom’s exhaustion, Lisa’s guilt) and respond in a way that rebuilds trust, showing sensitivity to grief and family balance.

Claude Chat Search Problem: Context not found, Only Titles

I've got a problem, and maybe someone has a solution: Is it possible that full-text search of chat content basically doesn't exist in Claude AI? When I search for a chat, I can't find it by a word that appears IN the chat itself. The chat is only found if the word is in its title. Can someone confirm this, or how does it work for you? P.S.: Meanwhile I'll look for a browser extension; maybe there's one that solves the problem.
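As a stopgap for the title-only behaviour described above, a local full-text search is easy to sketch, assuming the chats are available on your machine in some structured form. The `Chat` shape used here (a `title` plus a list of `messages` strings) and the function name `search_chats` are hypothetical and chosen only for illustration; they do not describe any real Claude export format.

```python
from typing import TypedDict


class Chat(TypedDict):
    # Hypothetical shape: not an official export format.
    title: str
    messages: list[str]


def search_chats(chats: list[Chat], term: str) -> list[str]:
    """Return titles of chats whose title OR message text contains term."""
    needle = term.casefold()  # case-insensitive comparison
    hits = []
    for chat in chats:
        in_title = needle in chat["title"].casefold()
        in_body = any(needle in msg.casefold() for msg in chat["messages"])
        if in_title or in_body:
            hits.append(chat["title"])
    return hits


if __name__ == "__main__":
    sample: list[Chat] = [
        {"title": "Trip planning", "messages": ["Book a hotel in Zürich"]},
        {"title": "Recipe ideas", "messages": ["Try a lentil soup"]},
    ]
    print(search_chats(sample, "zürich"))
```

The point of the sketch is the `in_body` check: a title-only search corresponds to dropping it, which is the behaviour the post describes.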

Towards Compact, Self-Observant AI: A Speculative Exploration — Just Around the Corner?

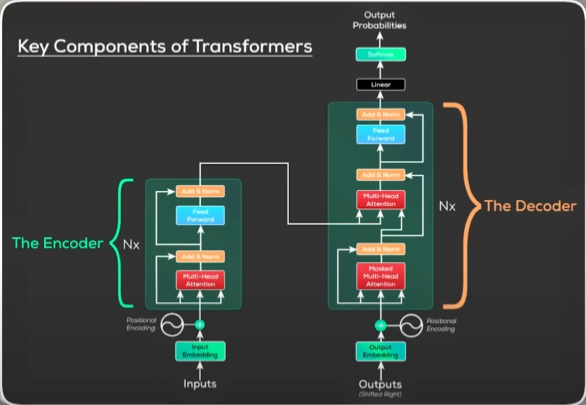

I’ve been wondering what might happen if everything below collapsed into one unified AI model with self-awareness, meaning we (or at least the builders) can observe and understand what’s going on inside:

- Latent spaces (compressed, low-dimensional representations that capture underlying data features)
- Liquid Neural Networks (LNNs), which I started creating myself two years ago, based on MIT’s innovations
- Tokenformers (which may have the potential to replace transformers)
- Hybrid models (combining multiple AI techniques for greater robustness and versatility)
- New lenses on paradigms
- Some form of self-emergent empathy

I suspect such a model could be surprisingly compact, potentially only a few million parameters and sized somewhere between 30 and 50 MB, maybe up to 100 MB. Of course, this is a rough estimate, but it’s exciting to imagine. Such models could run on phones without problems, and I believe they might even outperform current models like OpenAI’s and Claude’s. Let’s see how far off I am, and when this actually happens.

This isn’t about reaching the end of prompting, context engineering, red teaming, or agentic AI workers; those will continue evolving. But I believe the next paradigm shifts are just around the corner. What are your thoughts on this?

P.S. If you’re wondering where the research papers or articles on this are... I don’t have any to share yet. These are my own thoughts, exploring the future possibilities of AI.
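The size estimate above can be sanity-checked with simple arithmetic: the storage for a model's weights is roughly the parameter count times the bytes per parameter. The sketch below assumes weights only (no file-format overhead, tokenizer, or metadata) and uses the standard storage widths for `float32`, `float16`, and `int8`; the helper names are made up for this illustration.

```python
# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1}


def model_size_mb(num_params: int, dtype: str = "float32") -> float:
    """Approximate weight storage in megabytes (1 MB = 10**6 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1_000_000


def params_for_size(size_mb: float, dtype: str = "float32") -> int:
    """How many parameters fit into size_mb of weight storage."""
    return int(size_mb * 1_000_000 / BYTES_PER_PARAM[dtype])


if __name__ == "__main__":
    for size in (30, 50, 100):
        for dtype in BYTES_PER_PARAM:
            print(f"{size} MB at {dtype}: ~{params_for_size(size, dtype):,} parameters")
```

Under these assumptions, 30 to 50 MB corresponds to roughly 7.5 to 12.5 million parameters at `float32`, and proportionally more at lower precision, so the parameter count and file size quoted in the post are in the same ballpark.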

$50M!

OpenAI is offering $50M in grants to support nonprofits. https://openai.com/index/people-first-ai-fund/

Claude AI – The Cool Stuff Nobody Told You About

Claude has been able to do a couple of powerful things for quite a while now:

🔍 Code self-checking: Claude can review its own code. In practice, this means you can tell it not only to generate code, but also to write and execute tests for that same code.

🤠 For vibe coders: you don’t even need to know how the tests work. Just tell Claude to handle it. Example: “Create <whatever you want built> AND test it.”

🧠 Memory support: Claude now works with memory too, in a way similar to ChatGPT. This has also been available for some time. If you want to use it like ChatGPT, just copy your memory definitions into a Claude chat and call it, for example, "Memory reference". From then on, it can automatically refer back to them.

P.S.: Bonus tip: If the first line you write in a Claude chat looks like a headline, Claude will most likely name the chat after it automatically.
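To make the “create it AND test it” idea concrete, here is a hand-written sketch of the kind of result such a prompt is meant to produce: a small function together with tests that exercise it. The function `slugify` and its test cases are invented for illustration and are not actual Claude output.

```python
import re


def slugify(title: str) -> str:
    """Turn a title into a lowercase, hyphen-separated URL slug."""
    # Replace every run of non-alphanumeric characters with a single hyphen.
    slug = re.sub(r"[^a-z0-9]+", "-", title.lower())
    # Drop hyphens left over at the start or end.
    return slug.strip("-")


def test_slugify() -> None:
    assert slugify("Hello, World!") == "hello-world"
    assert slugify("  Claude AI  ") == "claude-ai"
    assert slugify("") == ""


if __name__ == "__main__":
    test_slugify()
    print("all tests passed")
```

Even if you never read the tests yourself, asking for them gives the model something it can run to catch its own mistakes before handing the code over.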

1 like • 3d

@Kevin De Garcia I might be the wrong guy to ask by now. ☺️ For me, creating apps works quite well with ChatGPT, Claude, or Perplexity, and some others. That said, I think Claude feels especially easy to use right now if you'd say about yourself that you still have a way to go, particularly since they’ve recently extended the context window by a lot. One very apparent difference, though, is that Claude is quite fast at creating code, especially if you adhere to the principles of vibe coding I wrote about in here. I hope this helps and makes sense.


@holger-morlok-2493

AI Presence Architect. Ready to break your Custom GPT’s limits and what you think is possible? I'll help (holger1976.substack.com)


Joined Jul 1, 2025

Zürich, Switzerland